Fine-Tuning Gemma for Personality - Part 1: Why Fine-Tune a 6-Year-Old?

I taught an AI to talk like Bluey Heeler from the kids' show. Not through prompt engineering or RAG—through fine-tuning a small language model on 111 conversation examples. Five minutes of training on my MacBook. The model learned to mimic her speech patterns.

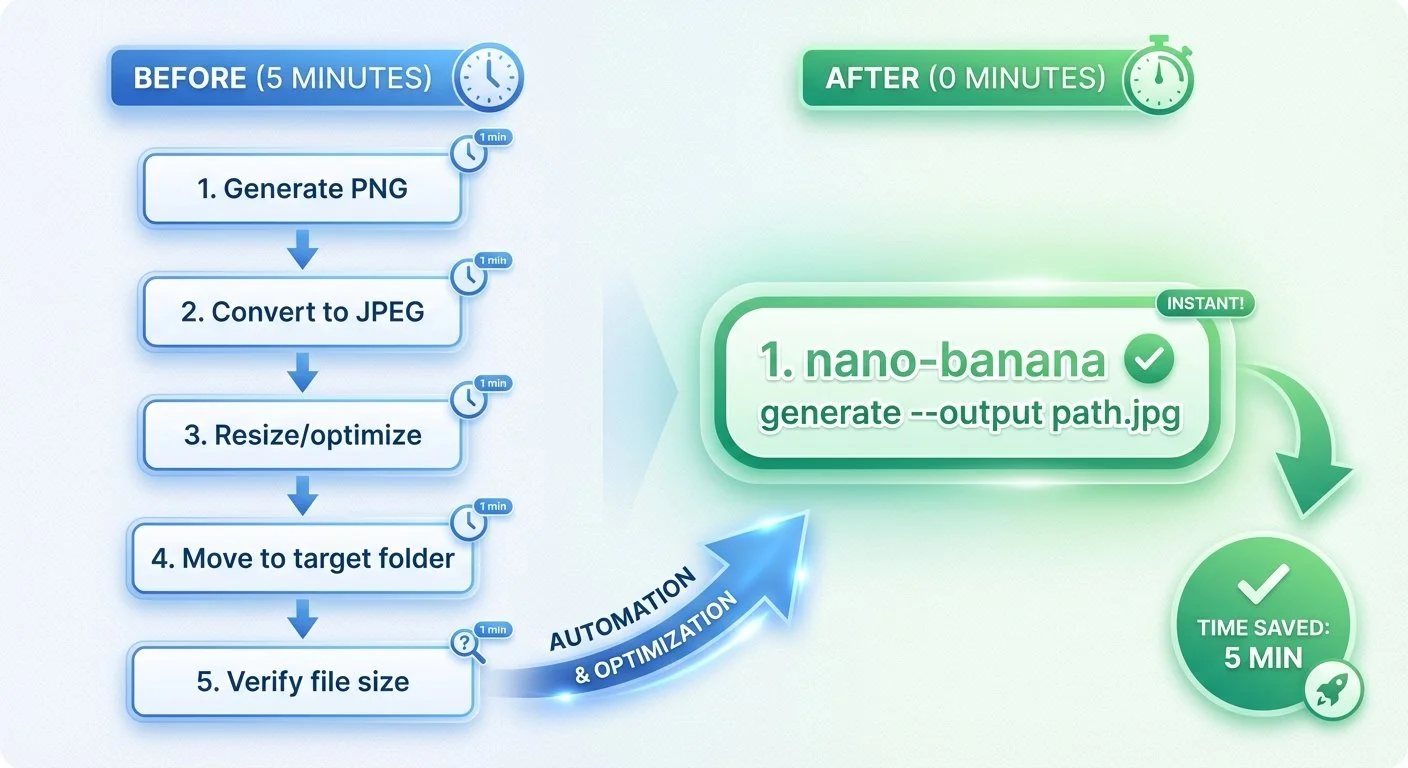

Removing Friction: Automating Nano Banana Image Workflows

Five minutes of manual work for every blog image—convert PNG to JPEG, resize, move to the right folder. While generating images for a 9-part blog series, I'd had enough. Twenty minutes of coding eliminated the friction.

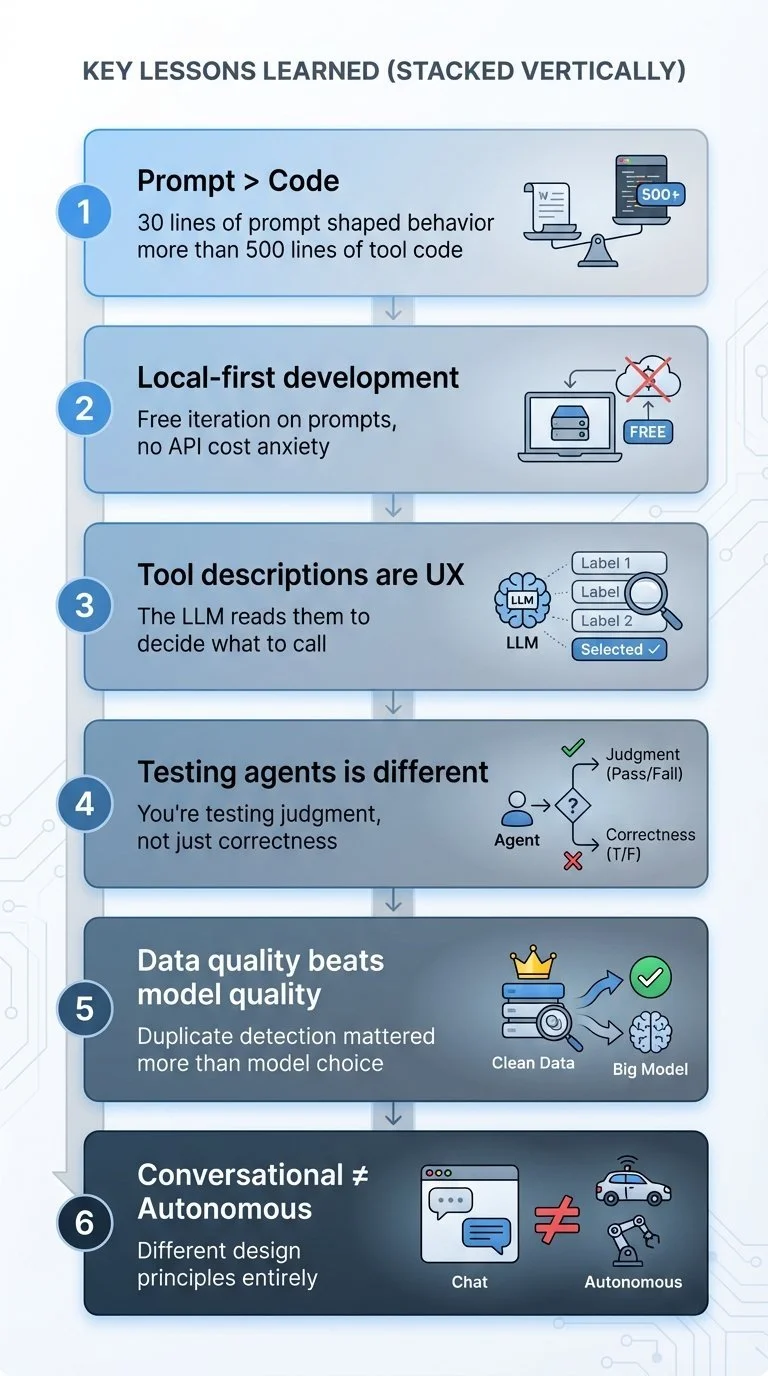

Building an Agentic Personal Trainer - Part 9: Lessons Learned

Nine posts later, what actually worked? What would I do differently? Here's my retrospective.

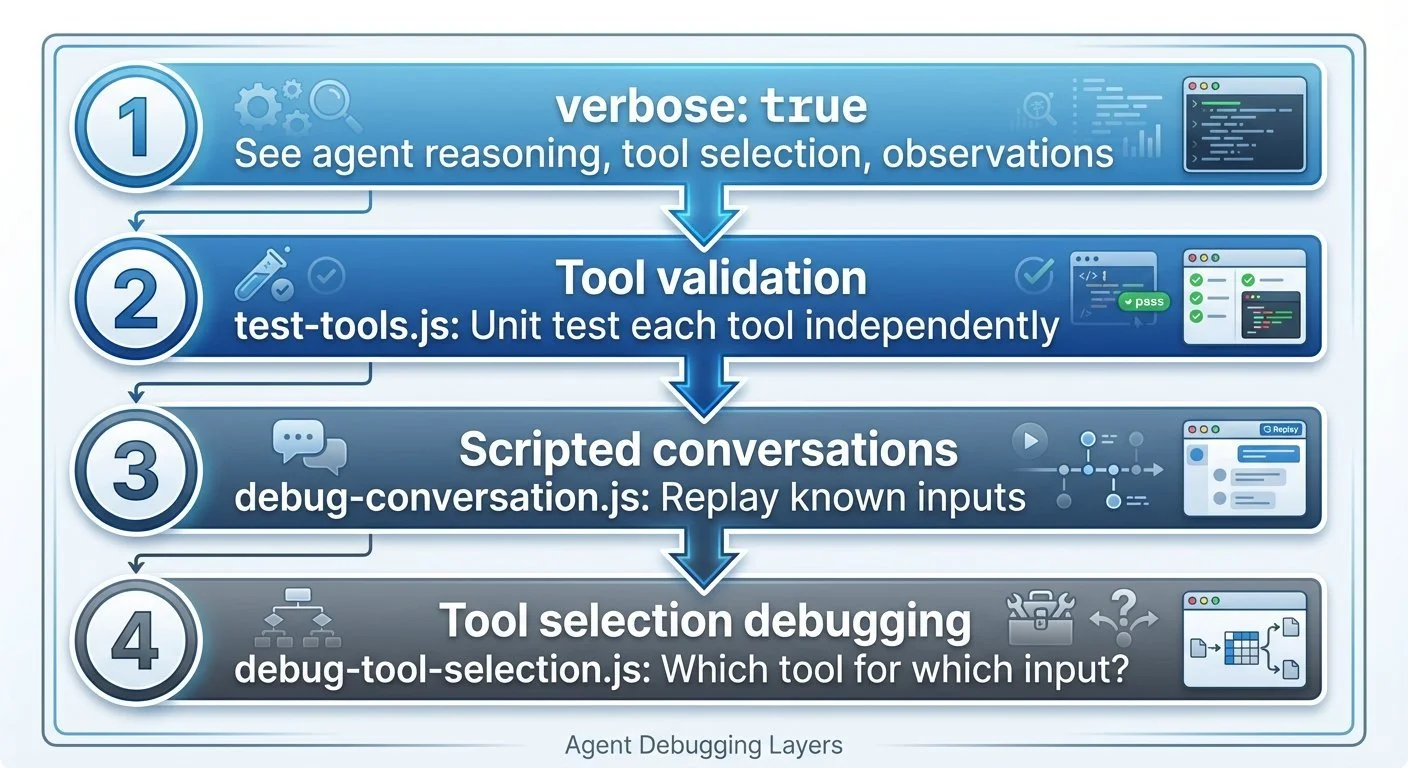

Building an Agentic Personal Trainer - Part 8: Testing and Debugging Agents

How do you test an AI agent? Unit tests don't cover "it gave bad advice." Verbose mode became my best debugging tool—watching the agent think out loud.

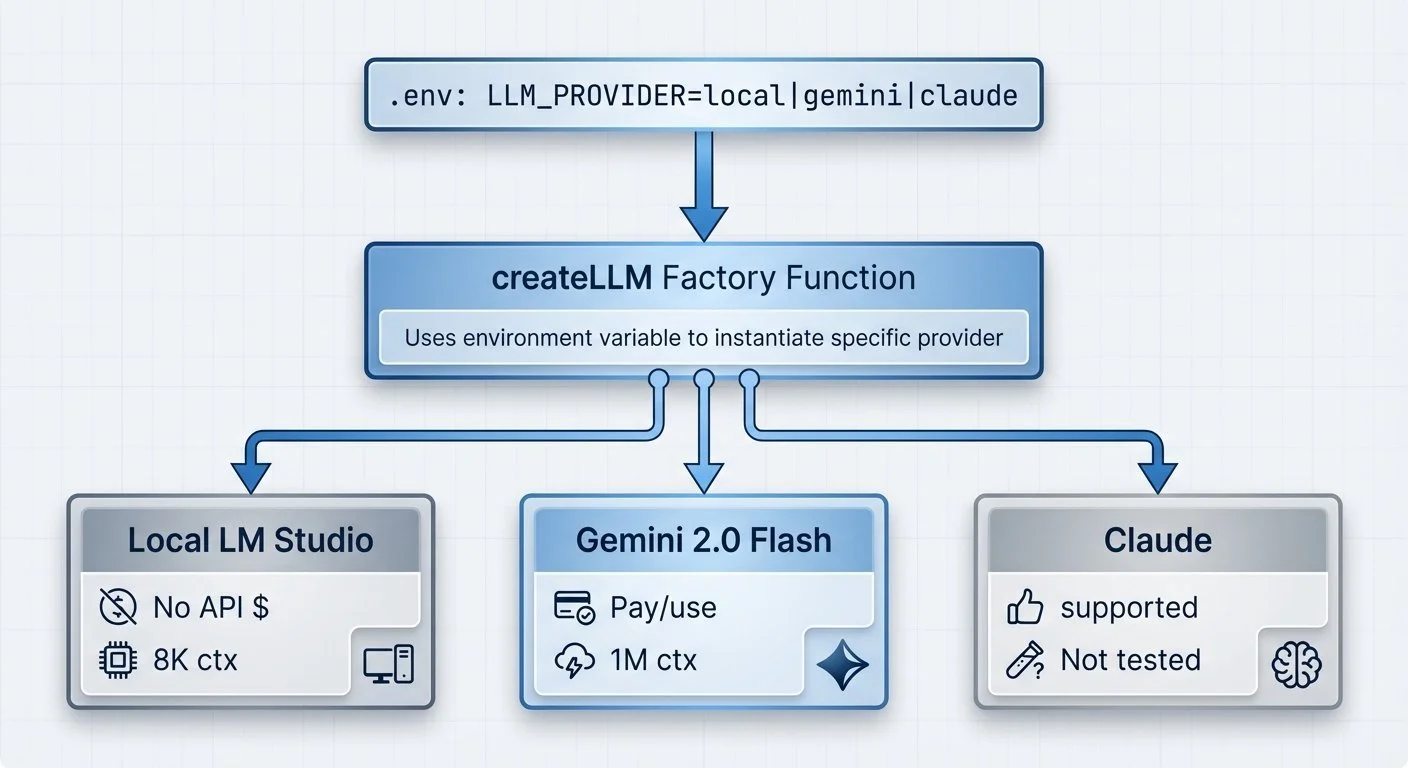

Building an Agentic Personal Trainer - Part 7: LLM Provider Abstraction

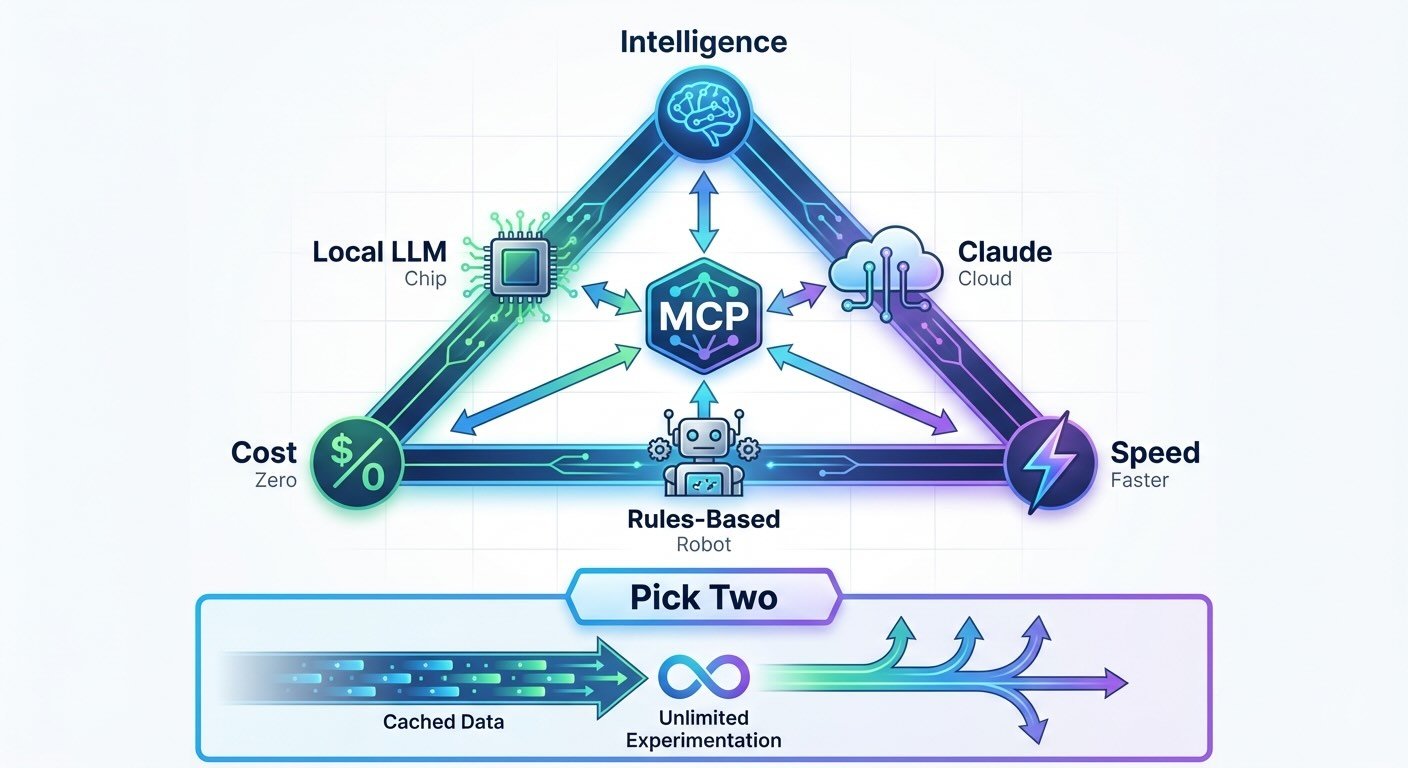

Running AI locally has no API costs—just electricity. Cloud providers charge per token. I wanted to switch between local and cloud models without rewriting my agent code.
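A common way to get that flexibility is a small interface that every backend implements, so the agent only ever calls one method. This sketch assumes hypothetical `LLMProvider`, `LocalProvider`, and `CloudProvider` classes whose `complete()` methods return stub strings instead of calling a real model server or cloud API. It is not the series' actual code.

```python
from typing import Protocol


class LLMProvider(Protocol):
    """Anything the agent can send a prompt to (hypothetical interface)."""

    def complete(self, prompt: str) -> str: ...


class LocalProvider:
    """Stand-in for a local model server; a real one would make an HTTP call."""

    def __init__(self, model: str) -> None:
        self.model = model

    def complete(self, prompt: str) -> str:
        # Stubbed response so the sketch runs without a model server.
        return f"[local:{self.model}] {prompt}"


class CloudProvider:
    """Stand-in for a cloud API that bills per token."""

    def __init__(self, model: str) -> None:
        self.model = model

    def complete(self, prompt: str) -> str:
        # Stubbed response so the sketch runs without network access.
        return f"[cloud:{self.model}] {prompt}"


def make_provider(name: str, model: str) -> LLMProvider:
    """Pick a backend from configuration; the agent code never changes."""
    providers = {"local": LocalProvider, "cloud": CloudProvider}
    return providers[name](model)


def run_agent(provider: LLMProvider, question: str) -> str:
    # The agent depends only on the interface, not on a concrete backend.
    return provider.complete(question)
```

Switching backends then becomes a configuration change, for example `make_provider("cloud", "example-model")` instead of `"local"` (the model name is a placeholder). Using `typing.Protocol` keeps the coupling structural: a new provider only needs a matching `complete()` method, not a shared base class.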

Building an Agentic Personal Trainer - Part 6: Memory and Learning

"Didn't we talk about my knee yesterday?" If your AI coach can't remember last session, it's not coaching—it's starting over every time.

Building an Agentic Personal Trainer - Part 5: Smart Duplicate Detection

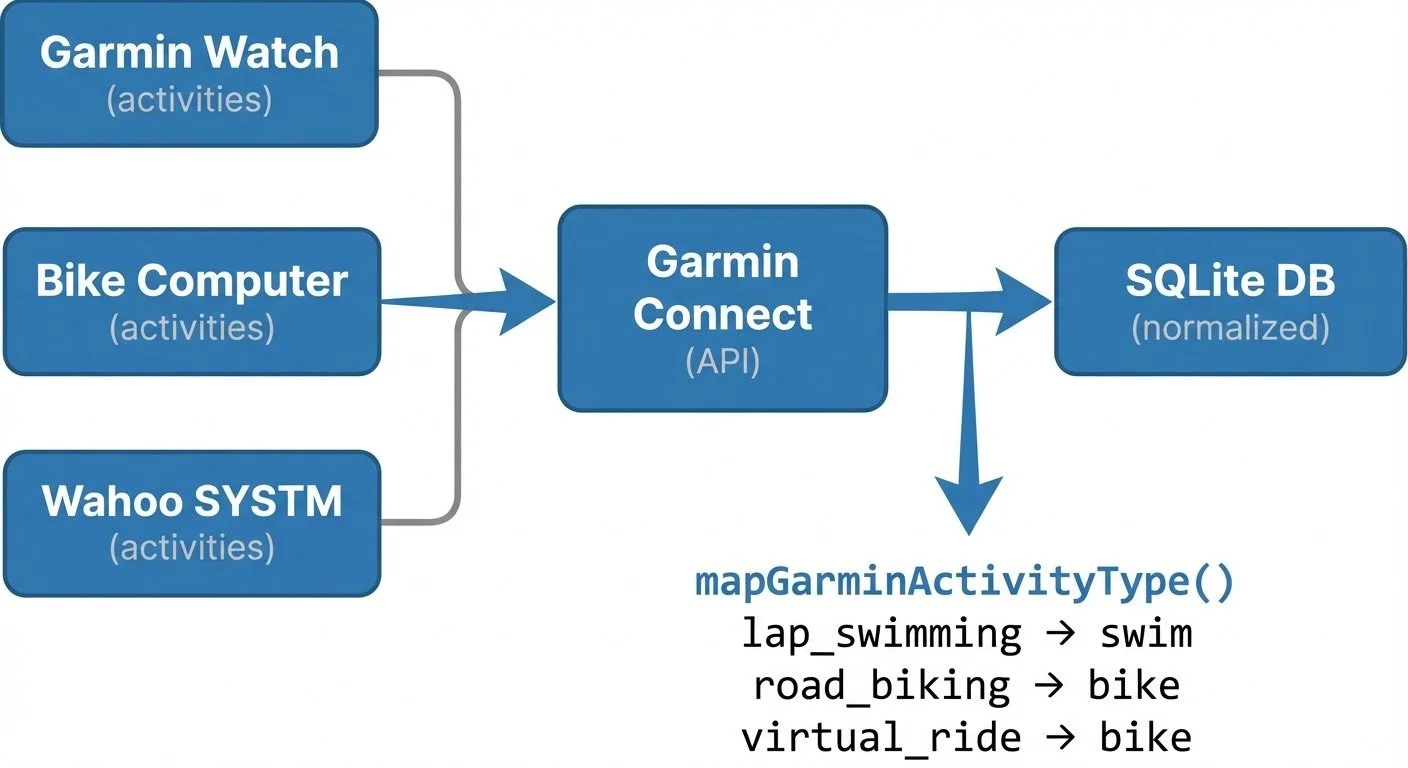

When I do an indoor bike workout, my bike computer records it. So does [Wahoo SYSTM](https://support.wahoofitness.com/hc/en-us/sections/5183647197586-SYSTM). Then both the bike computer and SYSTM sync to [Garmin Connect](https://connect.garmin.com/). Now I have two records of the same ride. My agent thinks I'm training twice as much as I am.
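One simple heuristic for catching those duplicates treats two records as the same ride when the sport matches, the start times fall within a few minutes of each other, and the durations are close. This is a minimal sketch under assumed thresholds (5 minutes, 10%) and a hypothetical `Activity` record. It is not necessarily how the post's detector decides, and it does not call the Garmin Connect API.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Activity:
    source: str          # e.g. "bike computer" or "SYSTM" (illustrative)
    sport: str
    start: datetime
    duration: timedelta


def is_duplicate(a: Activity, b: Activity,
                 start_tolerance: timedelta = timedelta(minutes=5),
                 duration_tolerance: float = 0.10) -> bool:
    """Same sport, start times within start_tolerance, and durations that
    differ by at most duration_tolerance (a fraction of the longer one)."""
    if a.sport != b.sport:
        return False
    if abs(a.start - b.start) > start_tolerance:
        return False
    longer = max(a.duration, b.duration)
    return abs(a.duration - b.duration) <= longer * duration_tolerance


def deduplicate(activities: list[Activity]) -> list[Activity]:
    """Keep the first record of each duplicate group, preserving input order."""
    kept: list[Activity] = []
    for act in activities:
        if not any(is_duplicate(act, k) for k in kept):
            kept.append(act)
    return kept
```

Keeping the first record is an arbitrary choice here; a real detector might instead prefer the source with richer data, such as power or heart rate.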

Building an Agentic Personal Trainer - Part 4: Garmin Integration

A personal trainer who doesn't know your recent workouts isn't very personal. The agent needed to connect to Garmin Connect—where my watch, bike computer, and other services like Wahoo SYSTM sync workout data automatically.

Building an Agentic Personal Trainer - Part 3: The System Prompt

Tools give the agent capabilities, but the system prompt gives it a personality. Getting the tone right (in this case, "coach, not drill sergeant") requires iteration, opinion, and intuition, not just correct syntax.

Building an Agentic Personal Trainer - Part 2: The Tool System

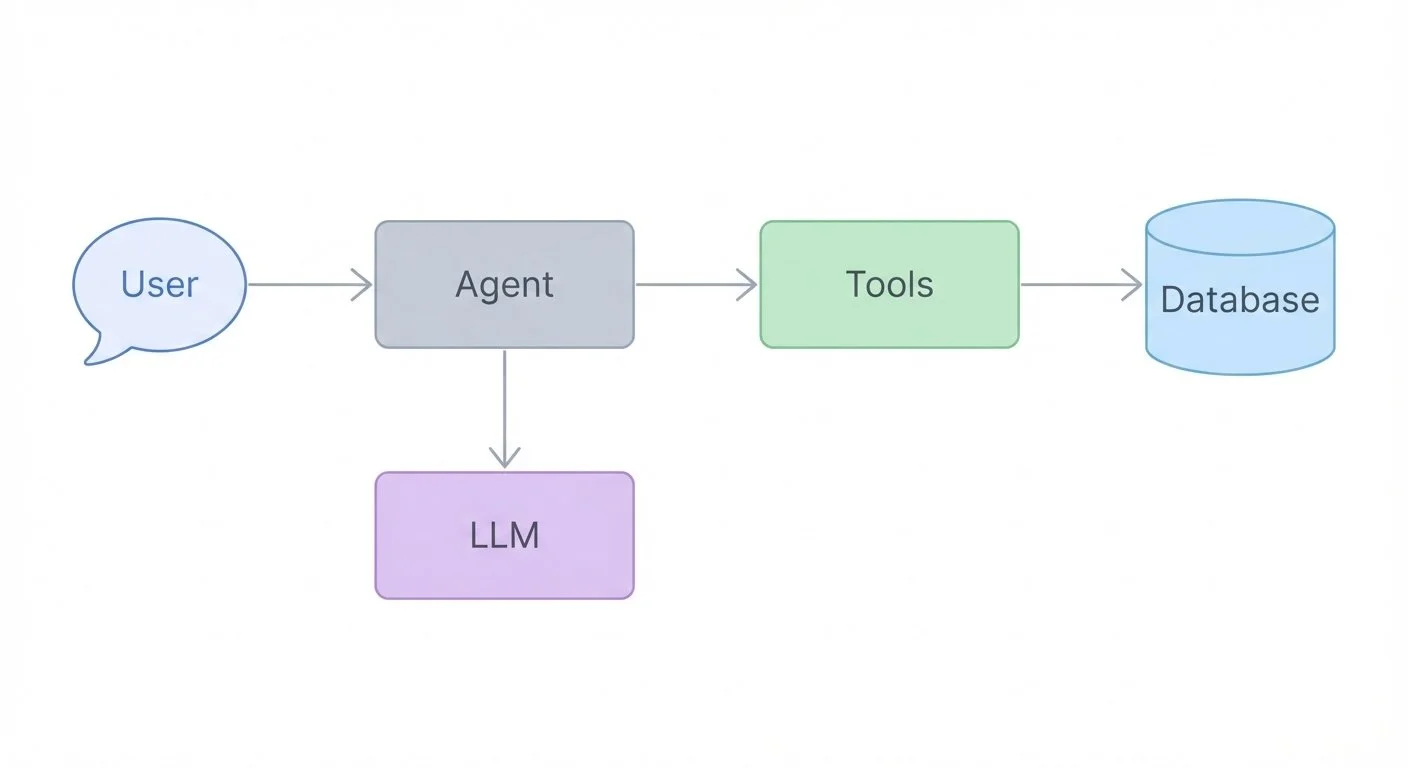

An LLM without tools is just a chatbot. To make a real coaching agent, I needed to give it hands—ways to check injuries, recall workouts, and manage schedules.
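A tool system can be as small as a registry that maps tool names to functions, plus a dispatcher that runs whichever tool the model asks for. This sketch assumes hypothetical `check_injuries` and `recall_workouts` tools backed by stub data. How the model's tool-call request gets parsed depends on the LLM provider, so that part is left out.

```python
from typing import Any, Callable

# Hypothetical registry: tool name -> (description shown to the LLM, handler).
TOOLS: dict[str, tuple[str, Callable[..., Any]]] = {}


def tool(name: str, description: str) -> Callable[[Callable[..., Any]], Callable[..., Any]]:
    """Decorator that registers a function as a tool the model may call."""
    def register(fn: Callable[..., Any]) -> Callable[..., Any]:
        TOOLS[name] = (description, fn)
        return fn
    return register


@tool("check_injuries", "List the athlete's currently active injuries.")
def check_injuries() -> list[str]:
    return ["left knee: mild pain"]  # stub data for the sketch


@tool("recall_workouts", "Return the last n logged workouts.")
def recall_workouts(n: int = 3) -> list[str]:
    history = ["ride 60 min", "run 30 min", "strength 45 min", "ride 90 min"]
    return history[-n:]


def dispatch(name: str, arguments: dict[str, Any]) -> Any:
    """Route a tool call requested by the model to the matching handler."""
    if name not in TOOLS:
        # Return an error the model can read instead of crashing the agent.
        return {"error": f"unknown tool: {name}"}
    _, handler = TOOLS[name]
    return handler(**arguments)


def tool_descriptions() -> str:
    """Text listing the available tools, e.g. for inclusion in a prompt."""
    return "\n".join(f"{n}: {desc}" for n, (desc, _) in TOOLS.items())
```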

Building an Agentic Personal Trainer - Part 1: Architecture and Philosophy

After building an [autonomous stock trading system](https://www.mosaicmeshai.com/blog/building-an-mcp-agentic-stock-trading-system-part-1-the-architecture) with custom MCP servers, I wanted something different: a conversational AI that collaborates rather than executes. Something that asks "how are you feeling?" before suggesting a workout.

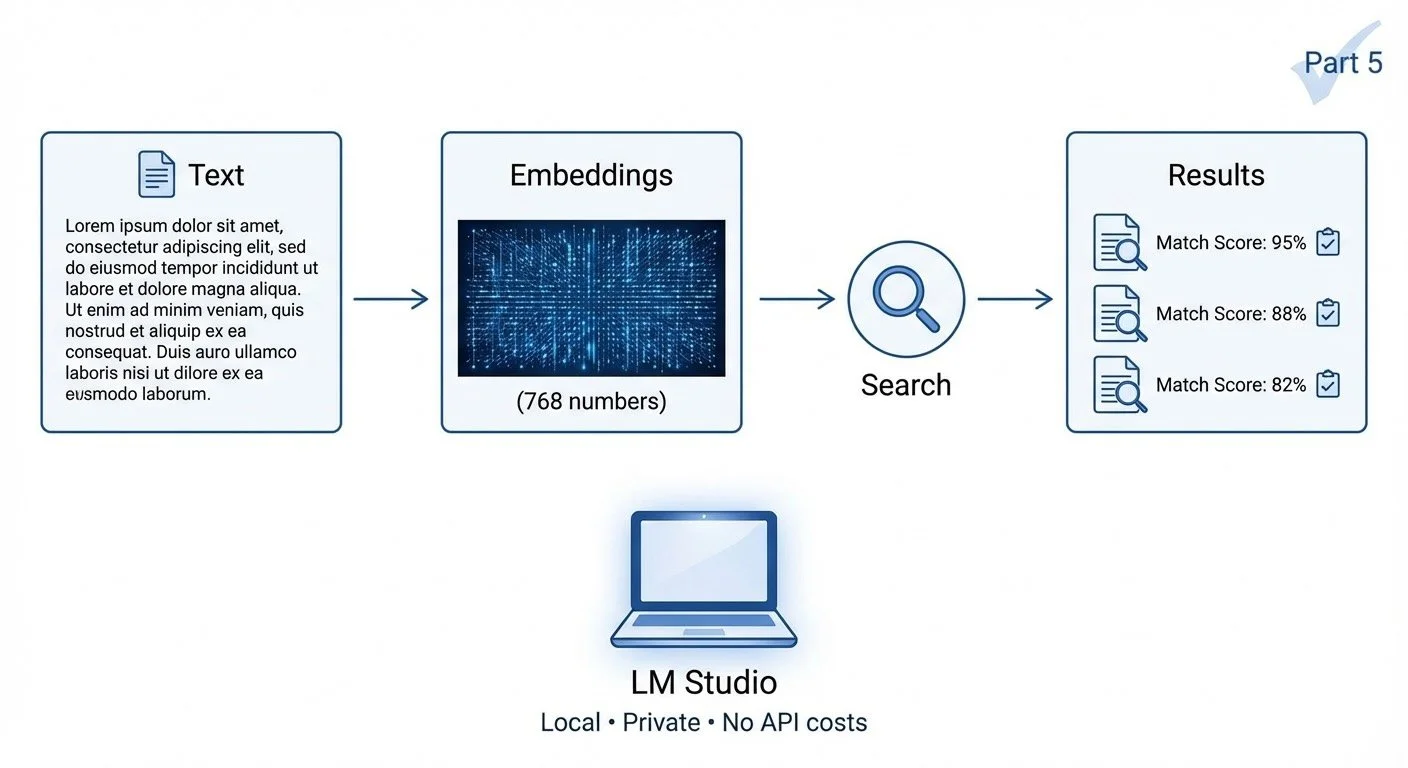

Building a Local Semantic Search Engine - Part 5: Learning by Building

Building a semantic search engine taught me more about embeddings than reading about them ever could. The real value wasn't the tool—it was understanding what those 768 numbers actually mean.

Building a Local Semantic Search Engine - Part 4: Caching for Speed

First search on a new directory: wait for every chunk to embed. A hundred chunks? A few seconds. A thousand? You're waiting—and burning electricity (or API dollars if you're using a cloud service). Second search: instant. The difference? A JSON file storing pre-computed vectors. Caching turned "wait for it" into "already done."

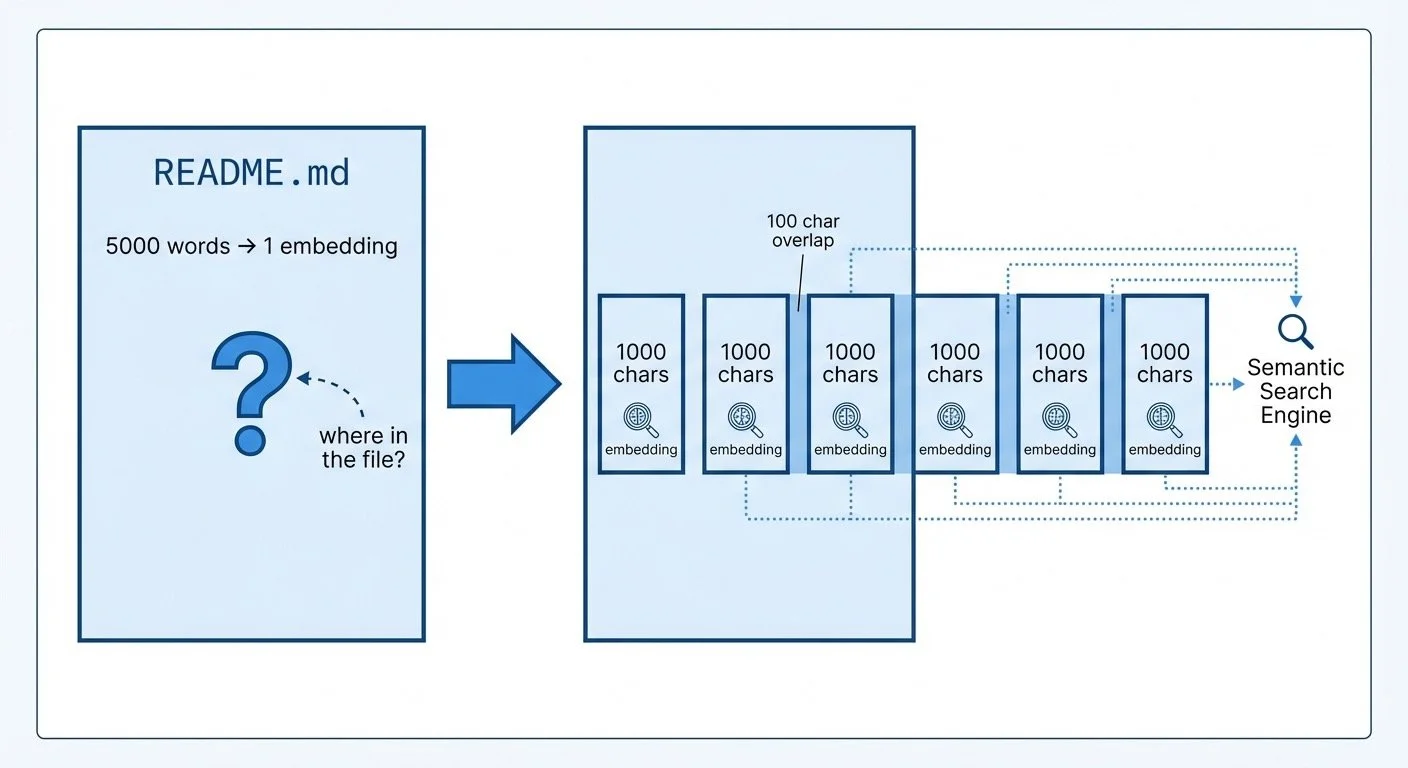
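A minimal sketch of that kind of cache, assuming chunks are keyed by a SHA-256 hash of their text and that `embed` stands in for whatever function calls the embedding model. The post's actual key scheme and file layout may differ.

```python
import hashlib
import json
from pathlib import Path
from typing import Callable

Vector = list[float]


def cached_embeddings(chunks: list[str],
                      embed: Callable[[str], Vector],
                      cache_path: Path) -> list[Vector]:
    """Return one vector per chunk, embedding only chunks not already cached.

    The cache is a JSON object mapping a SHA-256 hash of each chunk's text
    to its vector, so an edited chunk gets a new key and is re-embedded.
    """
    cache: dict[str, Vector] = {}
    if cache_path.exists():
        cache = json.loads(cache_path.read_text())

    vectors: list[Vector] = []
    changed = False
    for chunk in chunks:
        key = hashlib.sha256(chunk.encode("utf-8")).hexdigest()
        if key not in cache:
            cache[key] = embed(chunk)  # the slow step: model inference
            changed = True
        vectors.append(cache[key])

    # Only rewrite the file when something new was embedded.
    if changed:
        cache_path.write_text(json.dumps(cache))
    return vectors
```

Keying by content hash rather than by file path means unchanged chunks are reused even if a file is renamed, and edited chunks simply miss the cache.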

Building a Local Semantic Search Engine - Part 3: Indexing and Chunking

I pointed the search engine at itself—indexing the embeddinggemma project's own 3 files into 20 chunks. Why 20 chunks from 3 files? Because a 5,000-word README as a single embedding buries the relevant section. Chunking solves that.

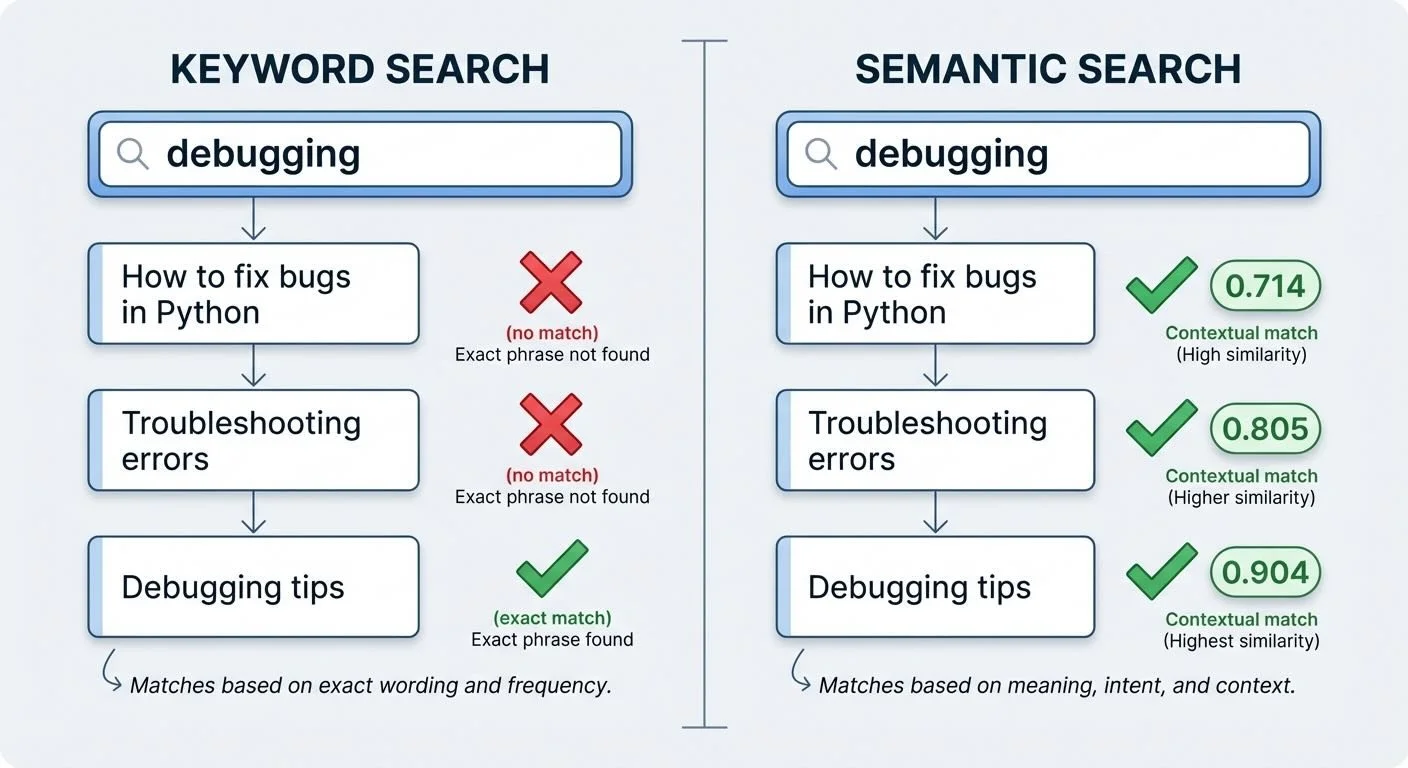
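One straightforward chunking strategy packs whole paragraphs into chunks up to a word limit, so each embedding covers a focused section instead of a whole file. This sketch assumes blank-line paragraph boundaries and a hypothetical `max_words` budget; the project's actual chunk sizes and split rules may differ.

```python
def chunk_text(text: str, max_words: int = 200) -> list[str]:
    """Split text into chunks of at most max_words words, breaking on
    paragraph boundaries where possible so each chunk stays on one topic.

    A single paragraph longer than max_words is sliced by word count.
    """
    chunks: list[str] = []
    current: list[str] = []
    count = 0

    for para in (p.strip() for p in text.split("\n\n")):
        if not para:
            continue
        words = para.split()
        # Oversized paragraph: flush what we have, then slice it by words.
        if len(words) > max_words:
            if current:
                chunks.append("\n\n".join(current))
                current, count = [], 0
            for i in range(0, len(words), max_words):
                chunks.append(" ".join(words[i:i + max_words]))
            continue
        # Start a new chunk if this paragraph would overflow the current one.
        if current and count + len(words) > max_words:
            chunks.append("\n\n".join(current))
            current, count = [], 0
        current.append(para)
        count += len(words)

    if current:
        chunks.append("\n\n".join(current))
    return chunks
```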

Building a Local Semantic Search Engine - Part 2: From Keywords to Meaning

Traditional search fails when you don't remember the exact words. Searching "debugging" won't find your notes about "fixing bugs." Semantic search finds them anyway—because it searches by meaning, not keywords.

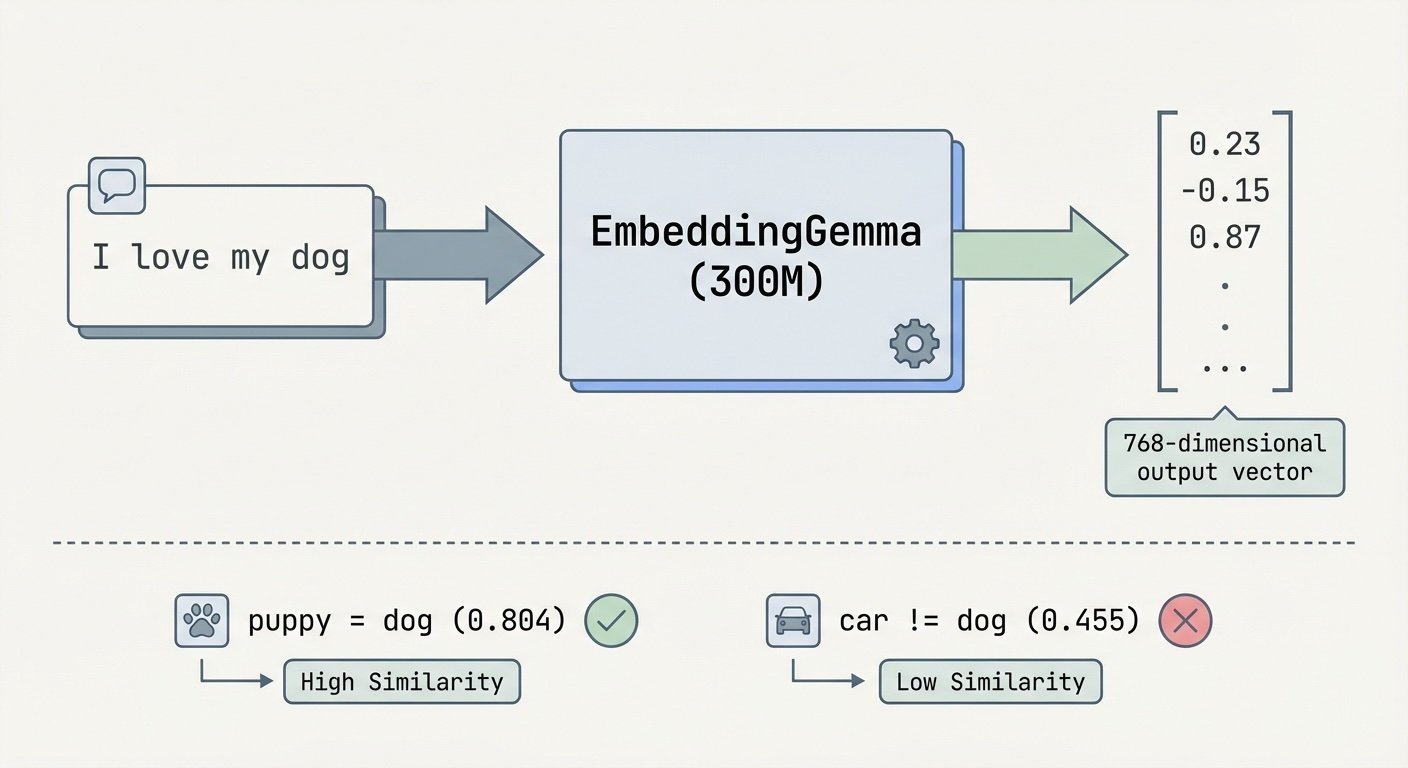

Building a Local Semantic Search Engine - Part 1: What Are Embeddings?

"I love playing with my dog" and "My puppy is so playful and fun" are 80.4% similar. Compare that to "Cars are expensive to maintain"—only 45.5% similar. How does a computer know that? Embeddings—and I wanted to run them entirely on my laptop.
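Scores like these are typically the cosine similarity between two embedding vectors, shown as a percentage; that is an assumption about how the numbers above were computed. The toy 3-dimensional vectors in this sketch are made up for illustration, whereas a real embedding model produces hundreds of dimensions per sentence.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy vectors standing in for real sentence embeddings (made-up values).
dog = [0.9, 0.8, 0.1]
puppy = [0.85, 0.75, 0.2]
cars = [0.1, 0.2, 0.95]

print(f"dog vs puppy: {cosine_similarity(dog, puppy):.1%}")
print(f"dog vs cars:  {cosine_similarity(dog, cars):.1%}")
```

Cosine similarity ignores vector length and compares only direction, which is why sentences about the same topic score high even when they share no words.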

Building an MCP Agentic Stock Trading System - Part 7: MCP Experimentation Lessons

After building three AI trading agents with MCP, here's what I'd do differently.

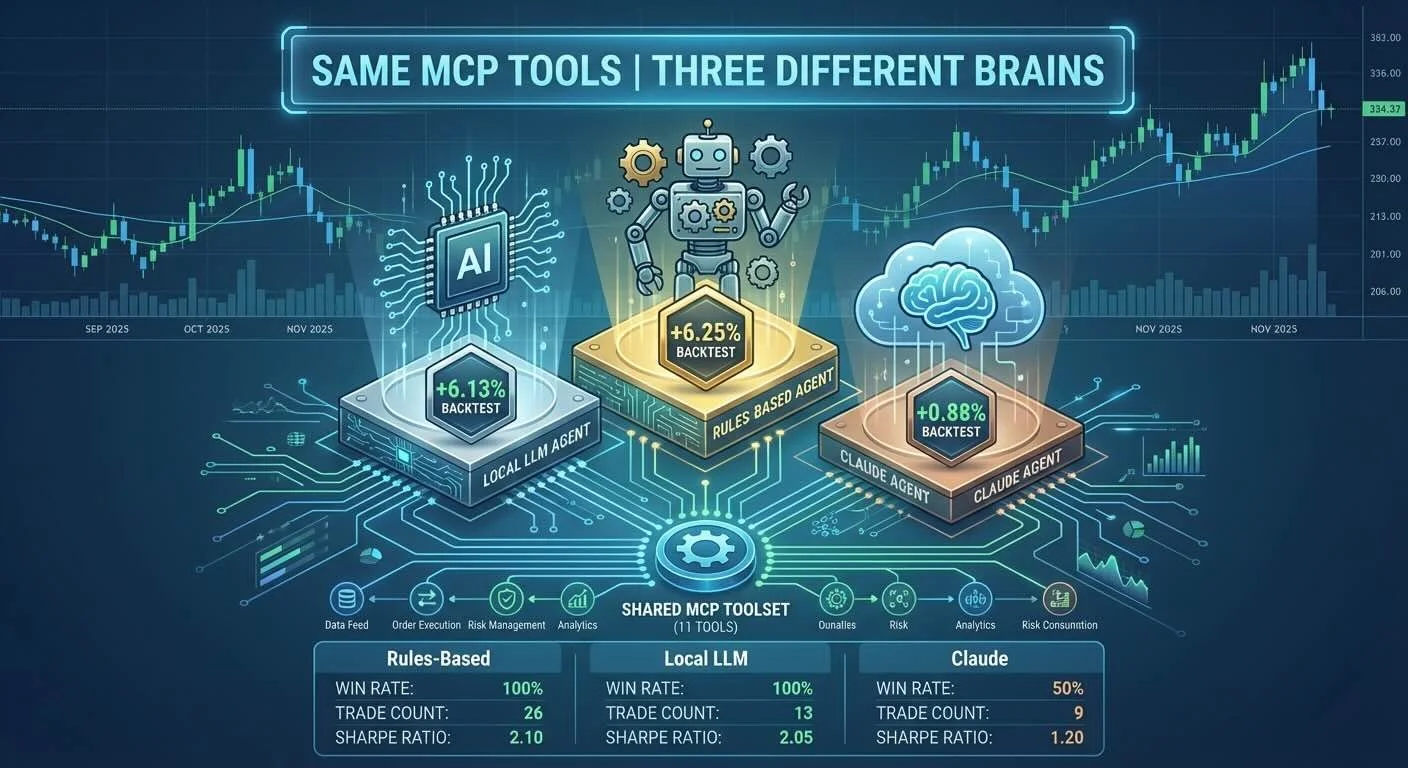

Building an MCP Agentic Stock Trading System - Part 6: Cloud vs Local vs Rules

Building with three agent types taught me: you can optimize for speed, cost, or intelligence—pick two.

Building an MCP Agentic Stock Trading System - Part 5: Backtesting All Three Agents

I ran all three agents over 2 months of real market data to see how MCP handles different "brains" with the same tools. The results surprised me—but not in the way I expected.

Building an MCP Agentic Stock Trading System - Part 4: When Agents Disagree

Three AI agents analyze Apple stock on the same day. Two reach the same conclusion through reasoning, one through arithmetic. What does this reveal about AI decision-making?