Did I Just Experience a >40X Productivity Gain? If Yes, What Does This Mean?

My daughter was in the back seat eating pizza, our dog settled beside her. I was driving our favorite route around the city lakes, the parkway lit up with city lights and Christmas decorations reflecting on the dark lake ice. Noise-cancelling AirPods in, listening to [Lenny's podcast about AI agents](https://www.lennysnewsletter.com/p/we-replaced-our-sales-team-with-20-ai-agents) for the second time.

About a week earlier, during my first listen, I could sense the episode triggering ideas, but I couldn't translate them into action. The second time through, I knew the conversation well enough that my mind could wander and make connections from what they were discussing. It was quiet, peaceful, the kind of drive where I could just think.

I'd been asking my wife to help me figure out how to reposition [Refrigerator Games](https://refrigeratorgames.mosaicmeshai.com/).[^1] What's running in production today is exactly what existed six years ago when I turned off the servers. Frozen in time. Unfortunately, she had been too busy to help, so the project had just been sitting there, waiting.

But driving around those lakes, I realized: I didn't need to wait. I'd been reading about [Simon Willison's experiments with Claude Code for the Web](https://simonwillison.net/2025/Oct/20/claude-code-for-web/) and I suddenly knew what to try.

How I Learned to Leverage Claude Code - Part 1: Resurrecting an 8-Year-Old Codebase

October 2025. I'd been staring at an 8-year-old Django codebase for years, wanting to resurrect it but never having the time. StartUpWebApp—my "general purpose" e-commerce platform forked from RefrigeratorGames—had been gathering dust since I shut it down to save hosting fees. I always felt like I'd left value on the table.

Then I discovered Claude Code CLI. After experimenting with AI coding tools (GitHub Copilot felt limited), Claude Code was my "ah-ha moment." I had new business ideas—RefrigeratorGames and CarbonMenu—and I needed infrastructure I could fork and iterate on rapidly. I didn't want to depend on someone else's platform. I wanted control.

So I tried: "Evaluate this repository and build a plan to modernize it and deploy to AWS."

Claude surprised me. It understood Django. It broke the work into phases. It thought like I would think. During that first session, I got excited. I could see how this might actually work.

I thought it would take 2-3 weeks. I had no idea.

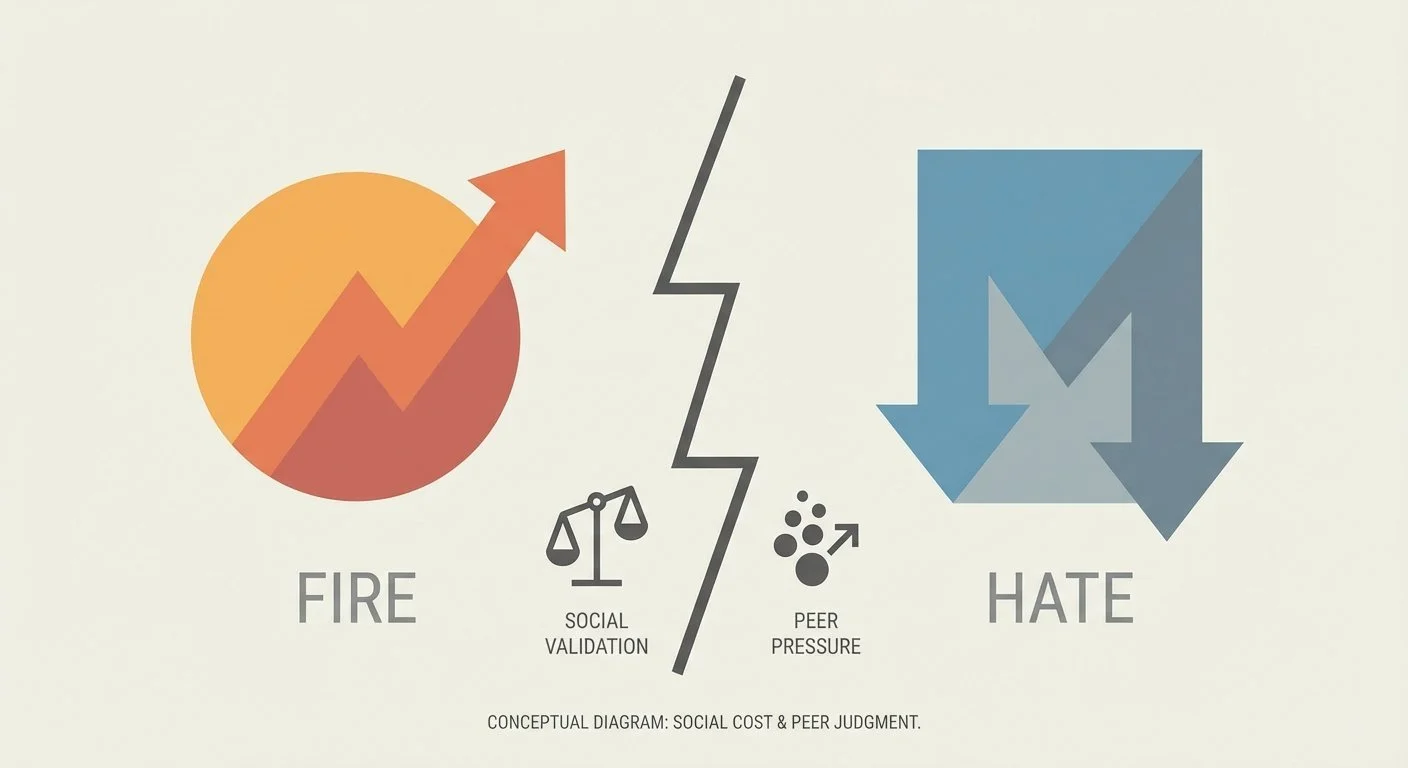

The Bart Test - Part 7: The Social Cost I Didn't See Coming

After analyzing Experiment 04's results in [Part 6](/blog/bart-test-part-6-the-american-ninja-warrior-problem), I designed Experiment 05 to test a hypothesis: Would tighter constraints improve differentiation, or make the test too easy?

I ran [Experiment 05](https://github.com/bart-mosaicmeshai/bart-test/tree/main/results/05_final_outputs). Printed the [evaluation sheets](https://github.com/bart-mosaicmeshai/bart-test/tree/main/evaluation_sheets/20251230). And prepared to find out.

Then I hit a wall I hadn't anticipated.

One judge planned to fill out her sheet the next day and ask a friend to help. Then she told me: "[Friend] hates AI, so I reconsidered asking them."

The second judge was very clear: "Don't ask my friends to help with this!"

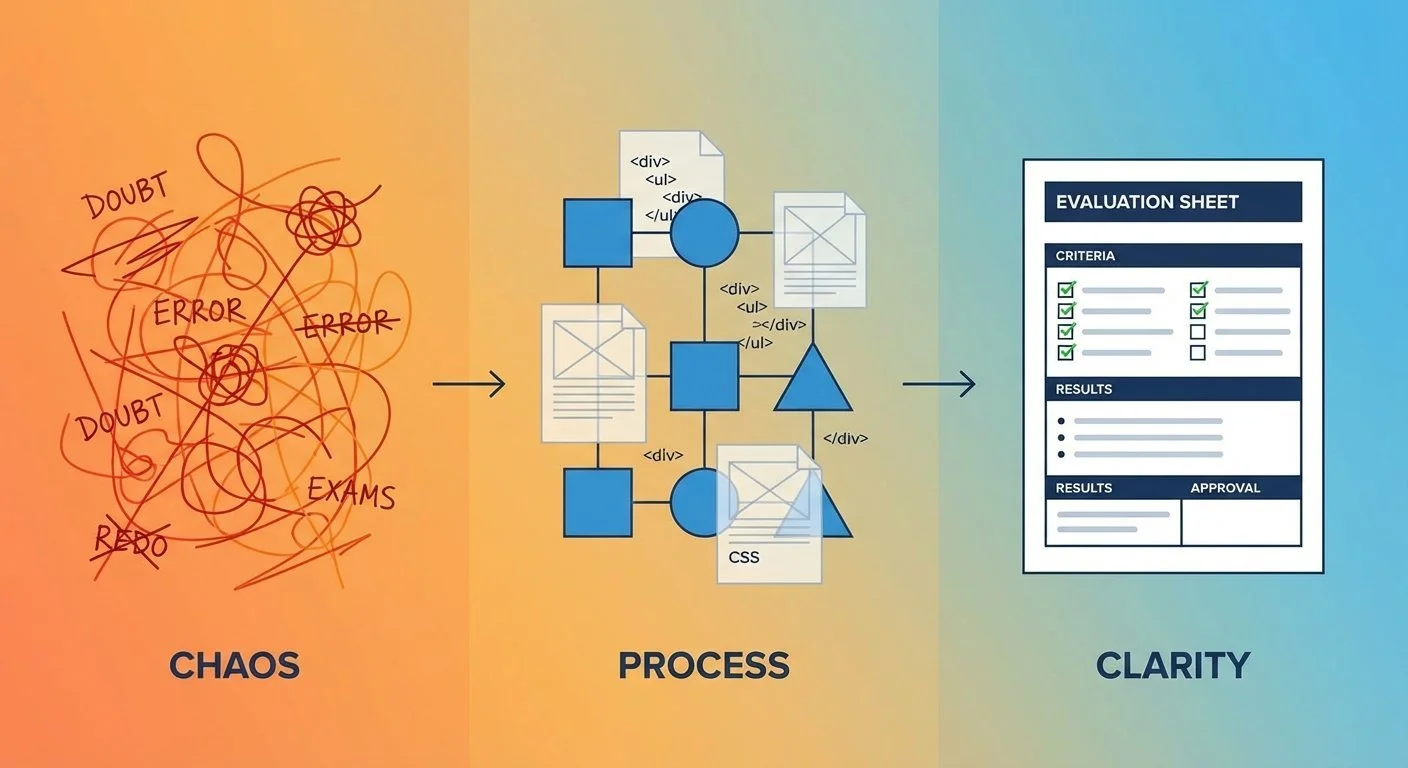

The Bart Test - Part 5: Redesigning From Scratch

After my teens ghosted the frontier model evaluation, I sat with a choice: give up on this whole thing, or try again.

The doubt was real. Maybe the Bart Test would never work. Maybe asking teenagers to evaluate AI-generated slang was fundamentally flawed. But I couldn't shake the insights from [Part 3](/blog/bart-test-part-3-the-zoo-not-duck-problem)—the "zoo not duck" problem, the slang half-life, the "trying too hard" pattern. Those felt real.

So I decided to try again. Not because I was confident it would work, but because I wasn't ready to give up.

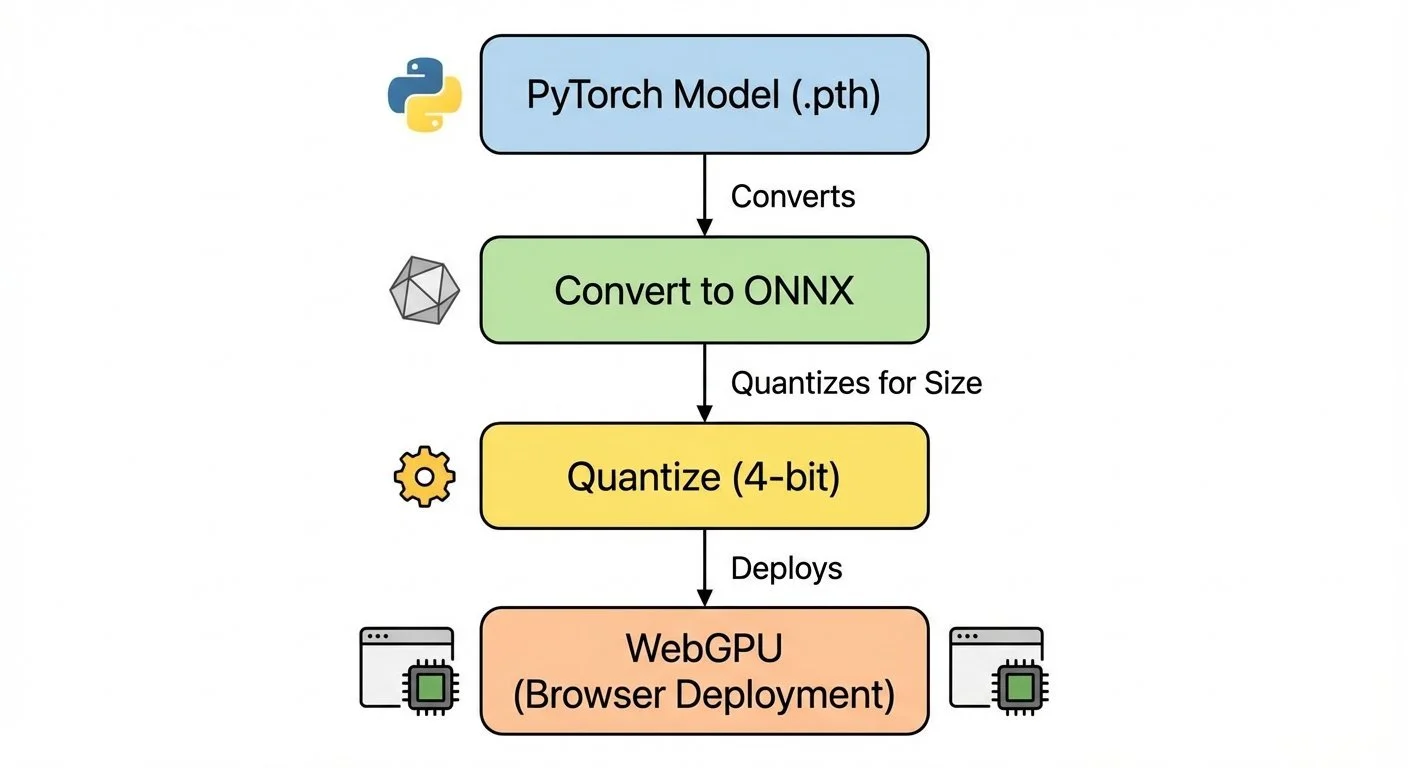

Fine-Tuning Gemma for Personality - Part 7: From PyTorch to Browser

Browser-based inference with a fine-tuned model. No backend server, no API keys, no monthly fees. Just client-side WebGPU running entirely on your device.

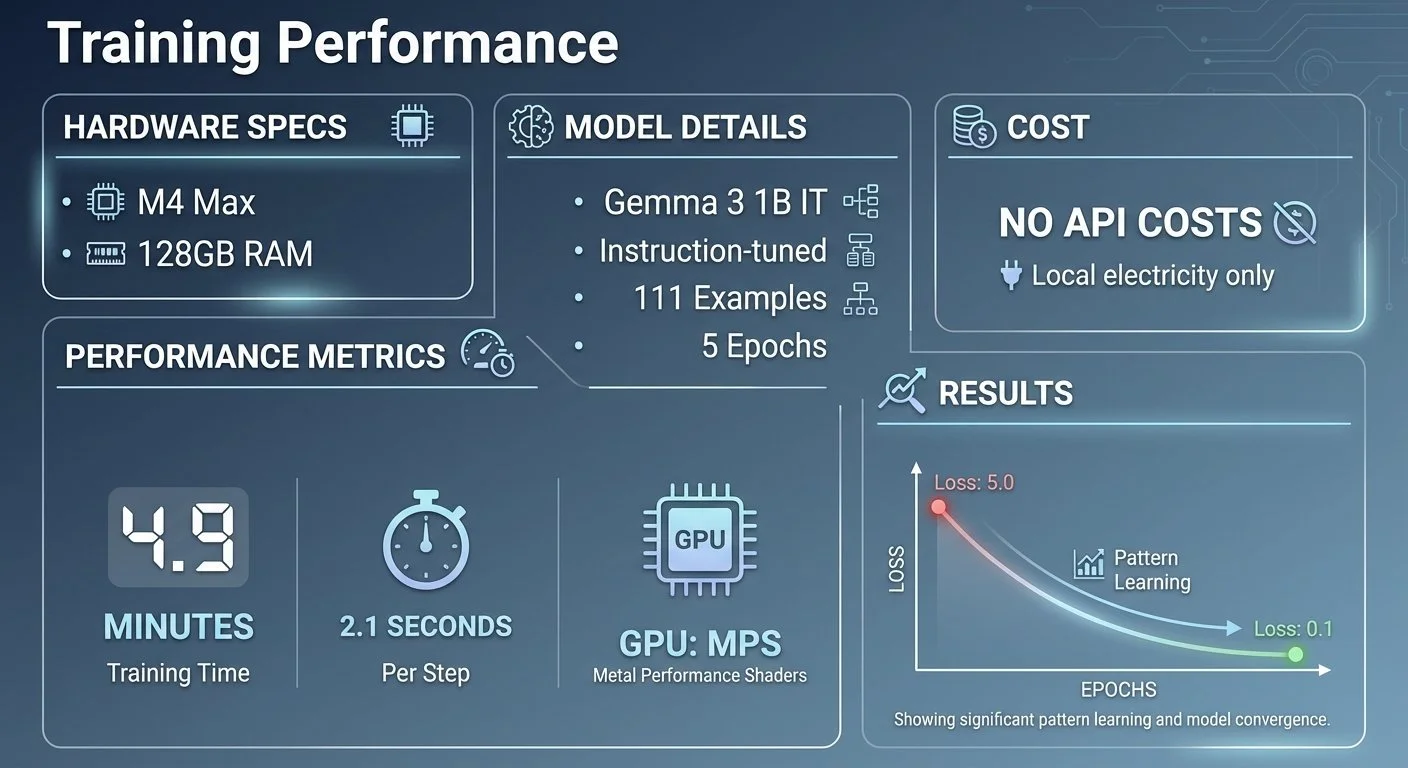

Fine-Tuning Gemma for Personality - Part 3: Training on Apple Silicon

Five minutes and no API costs to fine-tune a 1-billion-parameter language model on my laptop. No cloud GPUs, no monthly fees, no time limits. Just Apple Silicon and PyTorch.

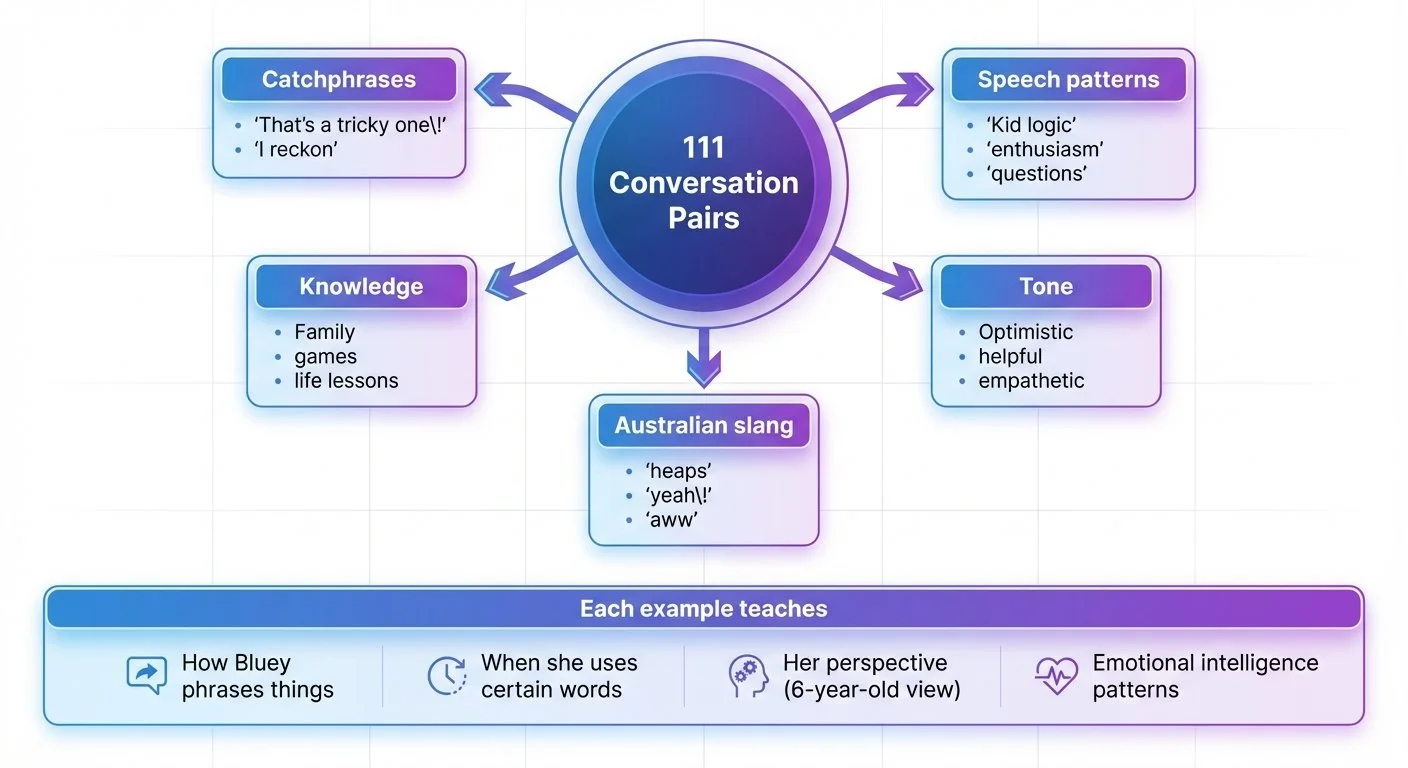

Fine-Tuning Gemma for Personality - Part 2: Building the Training Dataset

One hundred eleven conversations. That's what it took to demonstrate personality-style learning. Not thousands—just 111 AI-generated examples of how she talks, thinks, and helps.

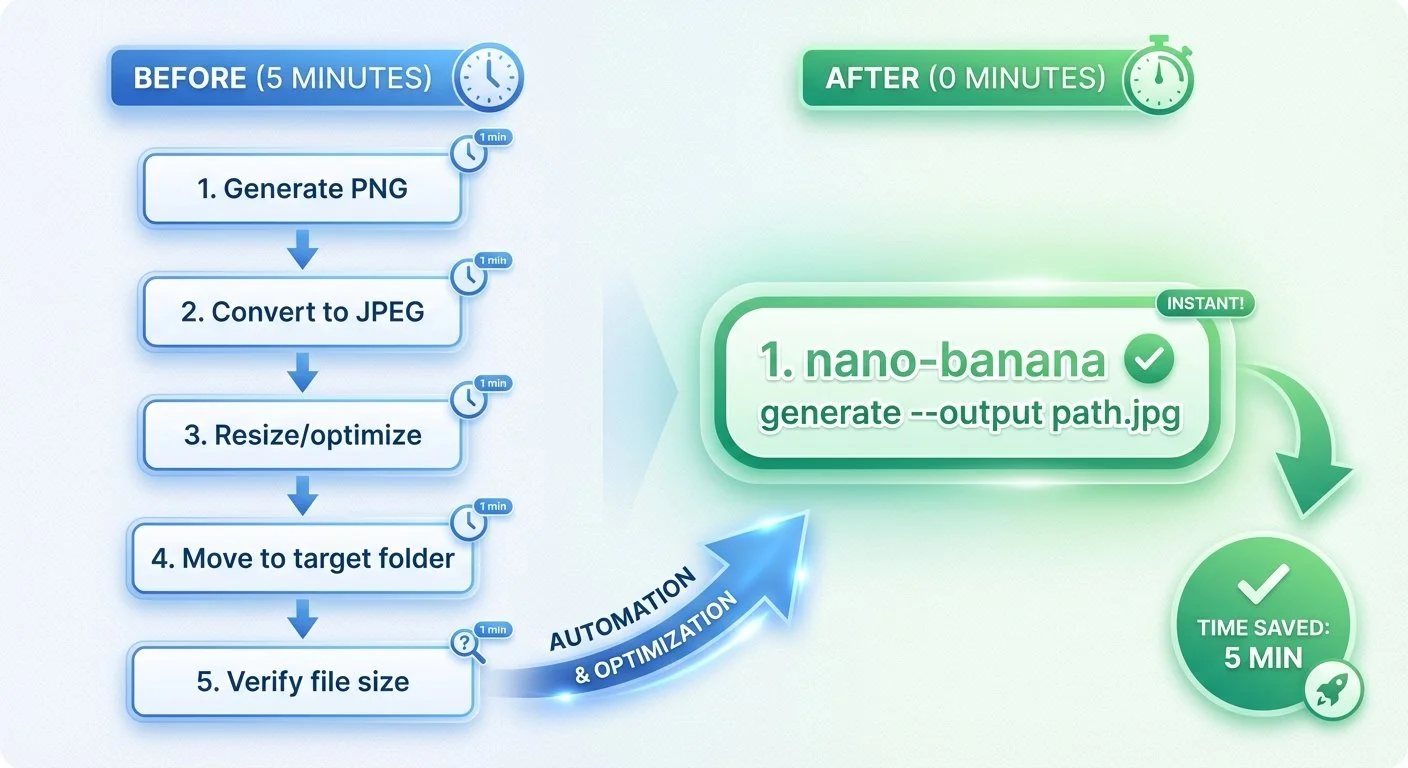

Removing Friction: Automating Nano Banana Image Workflows

Five minutes of manual work for every blog image—convert PNG to JPEG, resize, move to the right folder. While generating images for a 9-part blog series, I'd had enough. Twenty minutes of coding eliminated the friction.

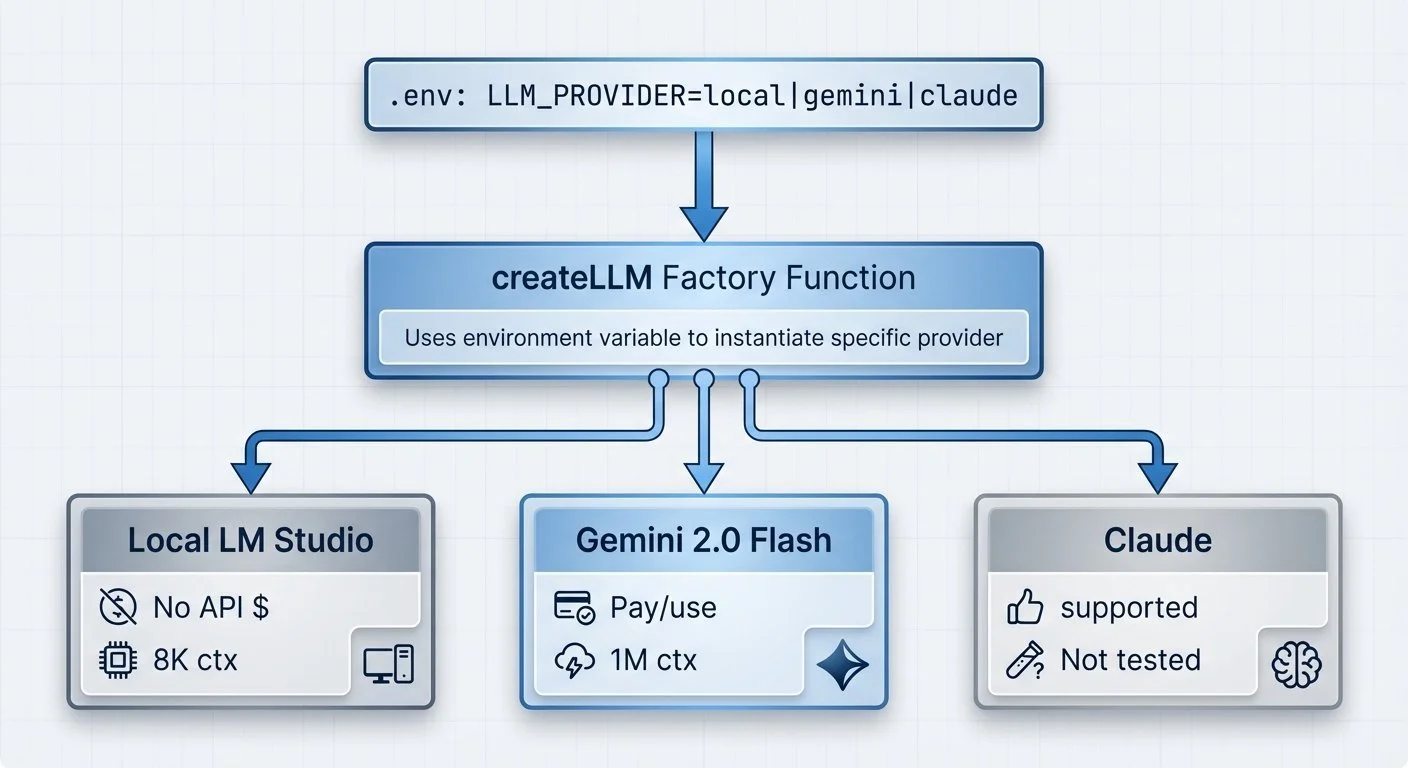

Building an Agentic Personal Trainer - Part 7: LLM Provider Abstraction

Running AI locally has no API costs—just electricity. Cloud providers charge per token. I wanted to switch between local and cloud models without rewriting my agent code.
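A minimal sketch of what that kind of switch could look like, assuming a single `complete` method as the shared interface. The names `LLMProvider`, `LocalProvider`, `CloudProvider`, and `coach_reply` are hypothetical and not taken from the actual project; the providers here only echo the prompt instead of calling a real model.

```python
from dataclasses import dataclass
from typing import Protocol


class LLMProvider(Protocol):
    """Hypothetical interface: anything that turns a prompt into a reply."""

    def complete(self, prompt: str) -> str: ...


@dataclass
class LocalProvider:
    """Stand-in for a locally hosted model (a real one would call a local runtime)."""

    model: str

    def complete(self, prompt: str) -> str:
        return f"[local:{self.model}] {prompt}"


@dataclass
class CloudProvider:
    """Stand-in for a hosted API (a real one would make a network request)."""

    model: str

    def complete(self, prompt: str) -> str:
        return f"[cloud:{self.model}] {prompt}"


def coach_reply(provider: LLMProvider, question: str) -> str:
    # The agent code depends only on the interface, never on a concrete provider.
    return provider.complete(f"As a trainer, answer: {question}")
```

Swapping `LocalProvider("model-a")` for `CloudProvider("model-b")` changes where the prompt goes without touching `coach_reply`.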

Building an Agentic Personal Trainer - Part 6: Memory and Learning

"Didn't we talk about my knee yesterday?" If your AI coach can't remember last session, it's not coaching—it's starting over every time.

Building an Agentic Personal Trainer - Part 5: Smart Duplicate Detection

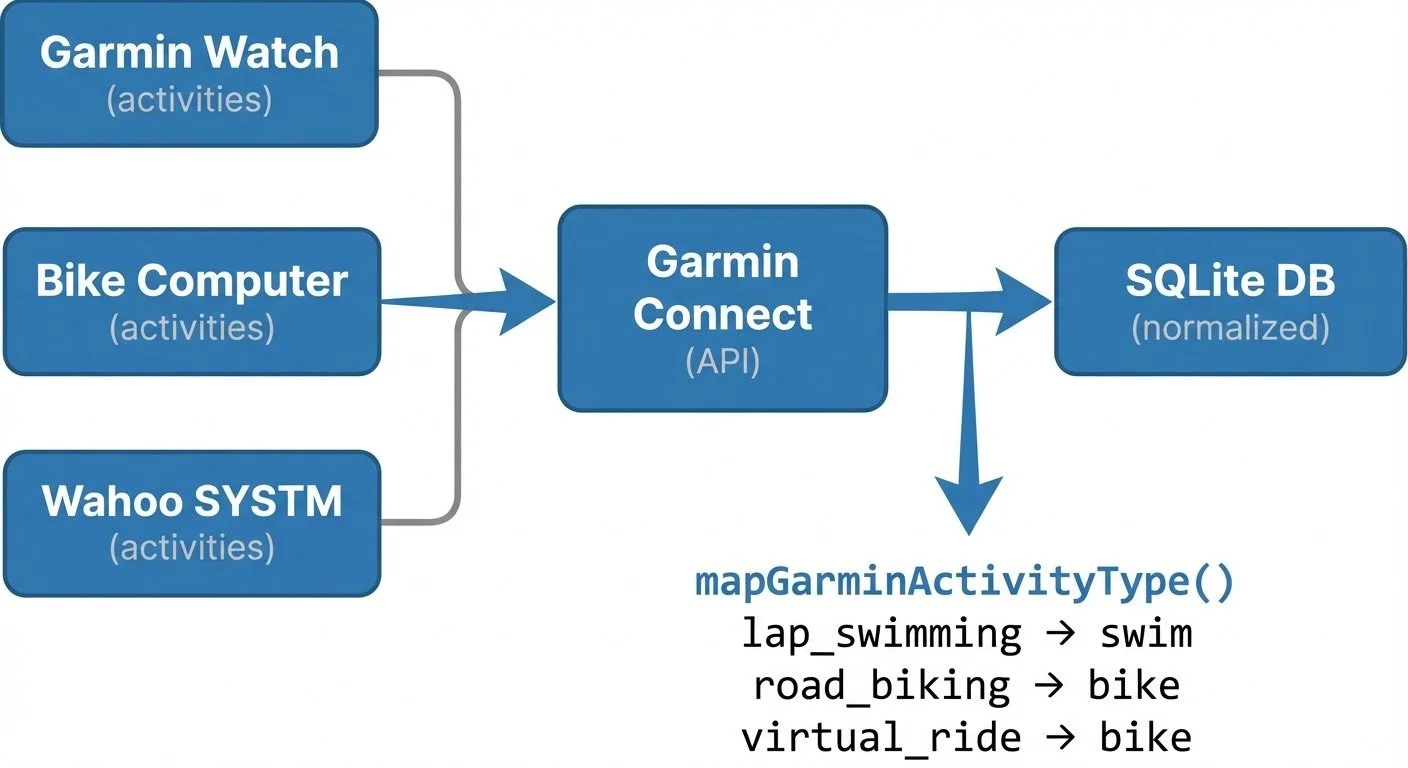

When I do an indoor bike workout, my bike computer records it. So does [Wahoo SYSTM](https://support.wahoofitness.com/hc/en-us/sections/5183647197586-SYSTM). Then both the bike computer and SYSTM sync to [Garmin Connect](https://connect.garmin.com/). Now I have two records of the same ride. My agent thinks I'm training twice as much as I am.
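One way to catch that kind of double entry, sketched under assumptions: two records count as the same ride if they start within a few minutes of each other and have similar durations. The `Activity` fields, the source labels, and the five-minute tolerances are illustrative guesses, not the rules the actual agent uses.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List


@dataclass
class Activity:
    source: str          # illustrative label, e.g. "bike_computer" or "systm"
    start: datetime
    duration_min: float


def is_duplicate(a: Activity, b: Activity,
                 start_tol: timedelta = timedelta(minutes=5),
                 duration_tol_min: float = 5.0) -> bool:
    # Same ride if both the start time and the duration are close enough.
    close_start = abs(a.start - b.start) <= start_tol
    close_duration = abs(a.duration_min - b.duration_min) <= duration_tol_min
    return close_start and close_duration


def dedupe(activities: List[Activity]) -> List[Activity]:
    # Keep the earliest-starting record of each ride; drop later near-matches.
    kept: List[Activity] = []
    for act in sorted(activities, key=lambda x: x.start):
        if not any(is_duplicate(act, k) for k in kept):
            kept.append(act)
    return kept
```

A stricter version might also compare distance or sport type before treating two records as one workout.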

Building an Agentic Personal Trainer - Part 4: Garmin Integration

A personal trainer who doesn't know your recent workouts isn't very personal. The agent needed to connect to Garmin Connect—where my watch, bike computer, and other services like Wahoo SYSTM sync workout data automatically.

Building an Agentic Personal Trainer - Part 2: The Tool System

An LLM without tools is just a chatbot. To make a real coaching agent, I needed to give it hands—ways to check injuries, recall workouts, and manage schedules.
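A rough sketch of the idea, assuming a plain registry that maps tool names to Python functions. The decorator, the tool names, and the canned return values are all made up for illustration; a real agent would read from stored data and pass tool descriptions to the model.

```python
from typing import Callable, Dict

# Hypothetical registry: tool name -> callable the agent may invoke.
TOOLS: Dict[str, Callable[..., str]] = {}


def tool(fn: Callable[..., str]) -> Callable[..., str]:
    """Register a function under its own name so the agent can look it up."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def check_injuries() -> str:
    # Placeholder value; a real tool would query stored injury notes.
    return "no injuries on file"


@tool
def recall_workouts(days: int = 7) -> str:
    # Placeholder value; a real tool would query the workout history.
    return f"workouts requested for the last {days} days"


def call_tool(name: str, **kwargs) -> str:
    # The model names a tool and its arguments; this dispatches the call.
    if name not in TOOLS:
        return f"unknown tool: {name}"
    return TOOLS[name](**kwargs)
```

Returning an error string for unknown tools, rather than raising, lets the model see the failure and try a different tool.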

Building a Local Semantic Search Engine - Part 3: Indexing and Chunking

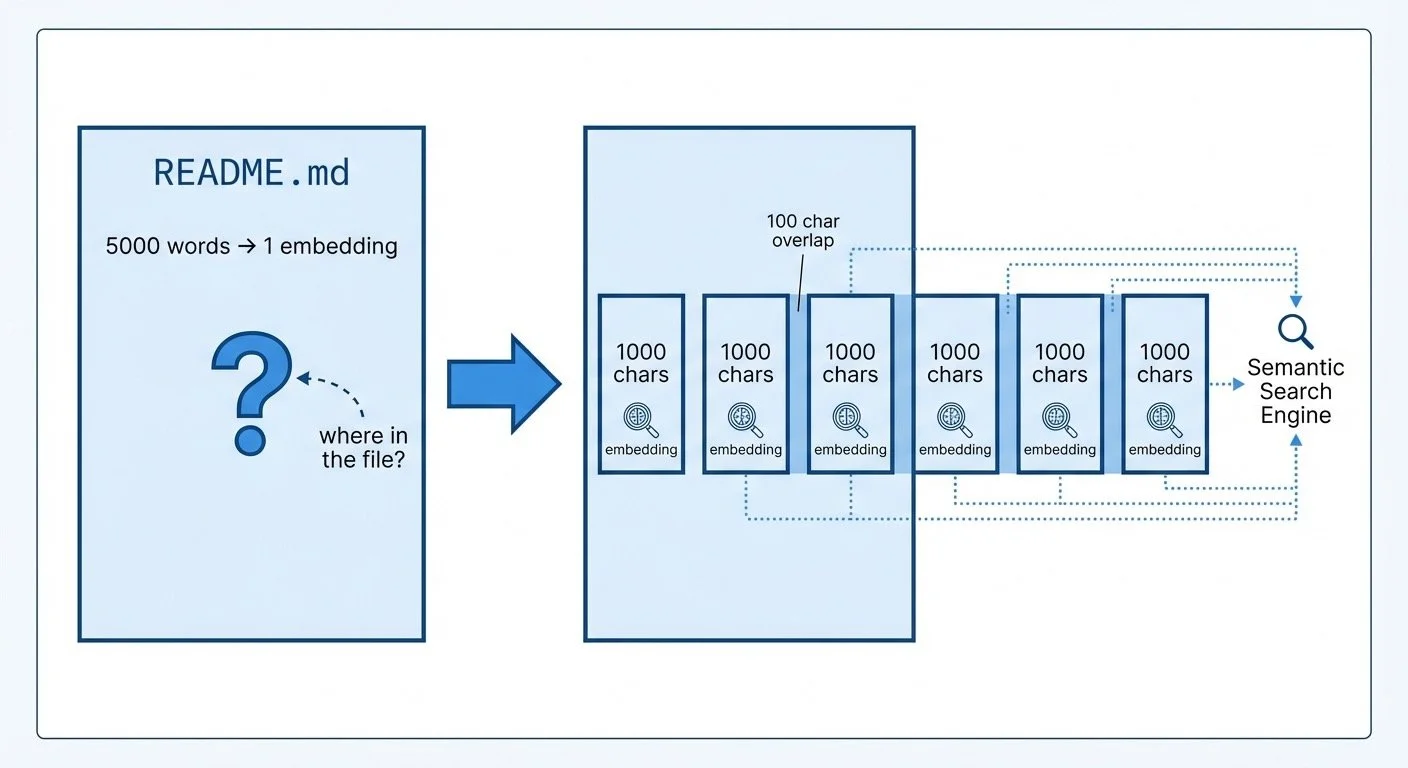

I pointed the search engine at itself—indexing the embeddinggemma project's own 3 files into 20 chunks. Why 20 chunks from 3 files? Because a 5,000-word README as a single embedding buries the relevant section. Chunking solves that.
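As a sketch of what chunking can look like, here is a simple word-window splitter with overlap. This is an assumed strategy for illustration; the post does not say how the actual project splits files, and the function name and default sizes are hypothetical.

```python
from typing import List


def chunk_words(text: str, chunk_size: int = 200, overlap: int = 40) -> List[str]:
    """Split text into windows of chunk_size words that share overlap words."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks: List[str] = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once a window reaches the end, so no tiny trailing chunk is added.
        if start + chunk_size >= len(words):
            break
    return chunks
```

Each chunk then gets its own embedding, so a query can match the one relevant section instead of an average over the whole file.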

Adding nano-banana 3 Support to My CLI Wrapper

Twenty-four hours after Google dropped nano-banana 3, I shipped support for it. New model, new resolutions (4K!), new features. This is what building with AI is like at this point in November 2025.