Did I Just Experience a >40X Productivity Gain? If Yes, What Does This Mean?

My daughter was in the back seat eating pizza, our dog settled beside her. I was driving our favorite route around the city lakes, the parkway lit up with city lights and Christmas decorations reflecting on the dark lake ice. Noise-cancelling AirPods in, listening to [Lenny's podcast about AI agents](https://www.lennysnewsletter.com/p/we-replaced-our-sales-team-with-20-ai-agents) for the second time.

About a week earlier, during my first listen to this episode, I could sense that the content was triggering some thoughts, but I wasn't able to translate them into action. The second time through, I knew the conversation well enough that my mind could wander. I could let the things they were talking about generate connections. It was quiet, peaceful, the kind of drive where I could just think.

I'd been asking my wife to help me figure out how to reposition [Refrigerator Games](https://refrigeratorgames.mosaicmeshai.com/).[^1] What's running in production today is exactly what existed six years ago when I turned off the servers. Frozen in time. Unfortunately, my wife has been too busy to help, so this project has just been sitting there, waiting.

But driving around those lakes, I realized: I didn't need to wait. I'd been reading about [Simon Willison's experiments with Claude Code for the Web](https://simonwillison.net/2025/Oct/20/claude-code-for-web/) and I suddenly knew what to try.

How I Learned to Leverage Claude Code - Part 1: Resurrecting an 8-Year-Old Codebase

October 2025. I'd been staring at an 8-year-old Django codebase for years, wanting to resurrect it but never having the time. StartUpWebApp—my "general purpose" e-commerce platform forked from RefrigeratorGames—had been gathering dust since I shut it down to save hosting fees. I always felt like I'd left value on the table.

Then I discovered Claude Code CLI. After experimenting with AI coding tools (GitHub Copilot felt limited), Claude Code was my "ah-ha moment." I had new business ideas—RefrigeratorGames and CarbonMenu—and I needed infrastructure I could fork and iterate on rapidly. I didn't want to depend on someone else's platform. I wanted control.

So I tried: "Evaluate this repository and build a plan to modernize it and deploy to AWS."

Claude surprised me. It understood Django. It broke the work into phases. It thought like I would think. During that first session, I got excited. I could see how this might actually work.

I thought it would take 2-3 weeks. I had no idea.

The Bart Test - Part 10: The Stochastic Parrot and What Visible Thinking Traces Might Reveal

Throughout this series, I've framed OLMo's behavior as "overthinking" - doing explicit planning that humans skip. But what if that's wrong?

What if OLMo's visible thinking traces are just making explicit what human brains do unconsciously?

The Efficiency Trap: What We Lose When AI Optimizes Everything

I've spent 28 years in the trenches of tech: *engineer, engineering manager, program manager, product leader, entrepreneur, consultant*. I've lived through the booms, the busts, and the disruptions. But lately, I'm finding it harder to stay on the "tech-optimist" side that has been part of my identity for all of these years.

Through Mosaic Mesh AI, I help people use this technology. I'm an "Applied AI" guy. But if I'm honest, I'm carrying tension that doesn't make it into my marketing copy.

The Bart Test - Part 9: The Question I Couldn't Answer

After questioning whether the Bart Test was worth continuing, I finally got the Experiment 05 evaluation sheets back.

I glanced at the scores and saw lots of 8s, 9s, and 10s.

My first reaction wasn't excitement. It was concern.

The Bart Test - Part 8: When an Interesting Experiment Might Not Be a Useful Tool (And Why That's Okay)

I'm at a crossroads with the Bart Test. I could probably continue:

- ✅ Process improvements are working (paper sheets, batch evaluation)

- ✅ Could recruit other teens (pay them $5-10 per judging session)

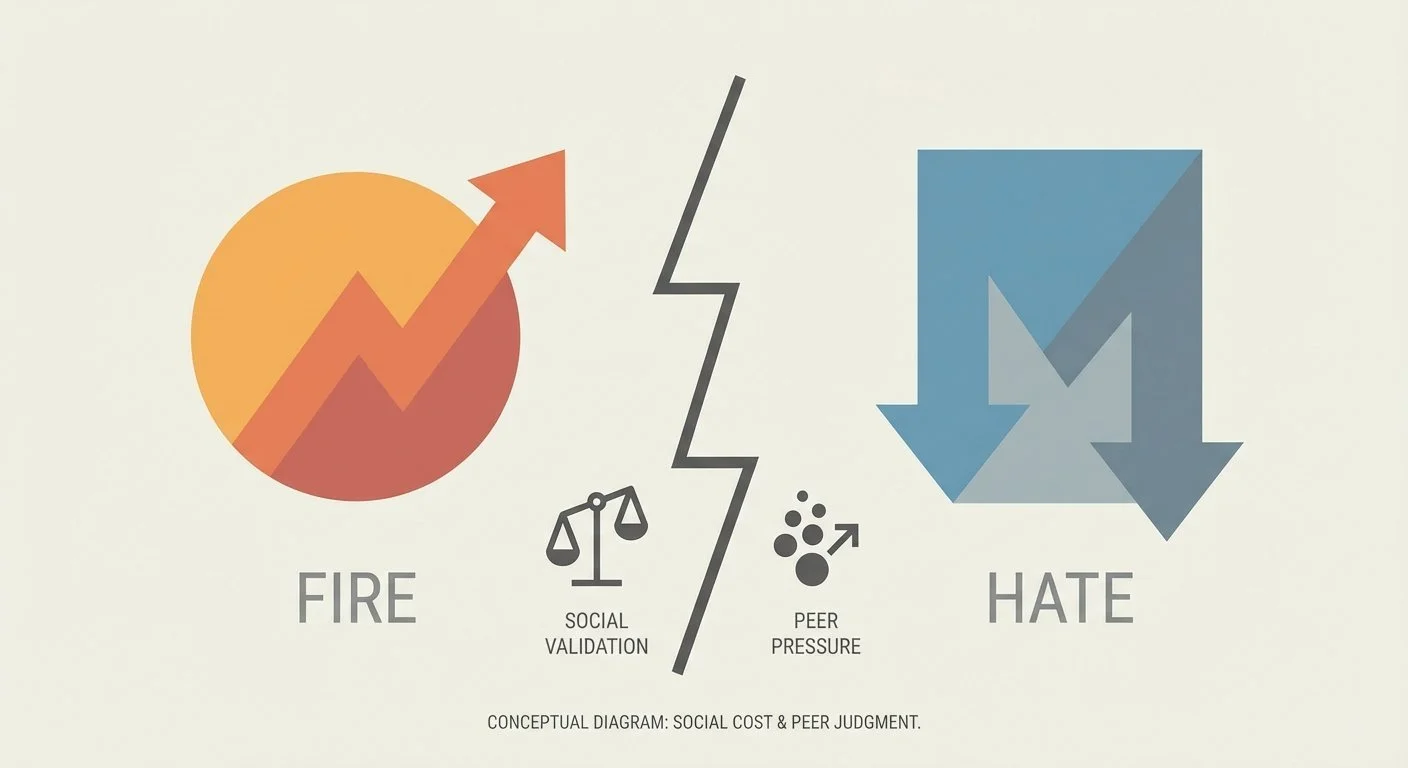

- ✅ Social cost is real but solvable (find teens who think AI is fire)

- ✅ Logistics are manageable (quarterly sessions, not per-model)

But I keep coming back to one question: What would someone DO with these results?

If GPT-5.2 scores 7/10 on cultural fluency and Claude Opus 4.5 scores 6/10... so what? Would a company, developer, or user choose a different model based on that? Should they?

I can't answer that question. And that has me questioning the value of continuing to pursue the Bart Test.

The Bart Test - Part 7: The Social Cost I Didn't See Coming

After analyzing Experiment 04's results in [Part 6](/blog/bart-test-part-6-the-american-ninja-warrior-problem), I designed Experiment 05 to test a hypothesis: Would tighter constraints improve differentiation, or make the test too easy?

I ran [Experiment 05](https://github.com/bart-mosaicmeshai/bart-test/tree/main/results/05_final_outputs). Printed the [evaluation sheets](https://github.com/bart-mosaicmeshai/bart-test/tree/main/evaluation_sheets/20251230). And prepared to find out.

Then I hit a wall I hadn't anticipated.

One judge was going to fill it out the next day and ask her friend to help. Then this judge told me: "[Friend] hates AI, so I reconsidered asking them."

The second judge was very clear: "Don't ask my friends to help with this!"

The Bart Test - Part 6: The American Ninja Warrior Problem

The process validation from [Part 5](/blog/bart-test-part-5-redesigning-from-scratch) worked. Judges completed the paper sheets in about 10 minutes. They engaged with it. I got detailed feedback.

When I sat down to analyze the [completed ratings](https://github.com/bart-mosaicmeshai/bart-test/blob/main/evaluation_sheets/20251228/completed_ratings_20251228.json) from Experiment 04, the patterns were clear. But I realized I was at risk of misinterpreting what they meant.

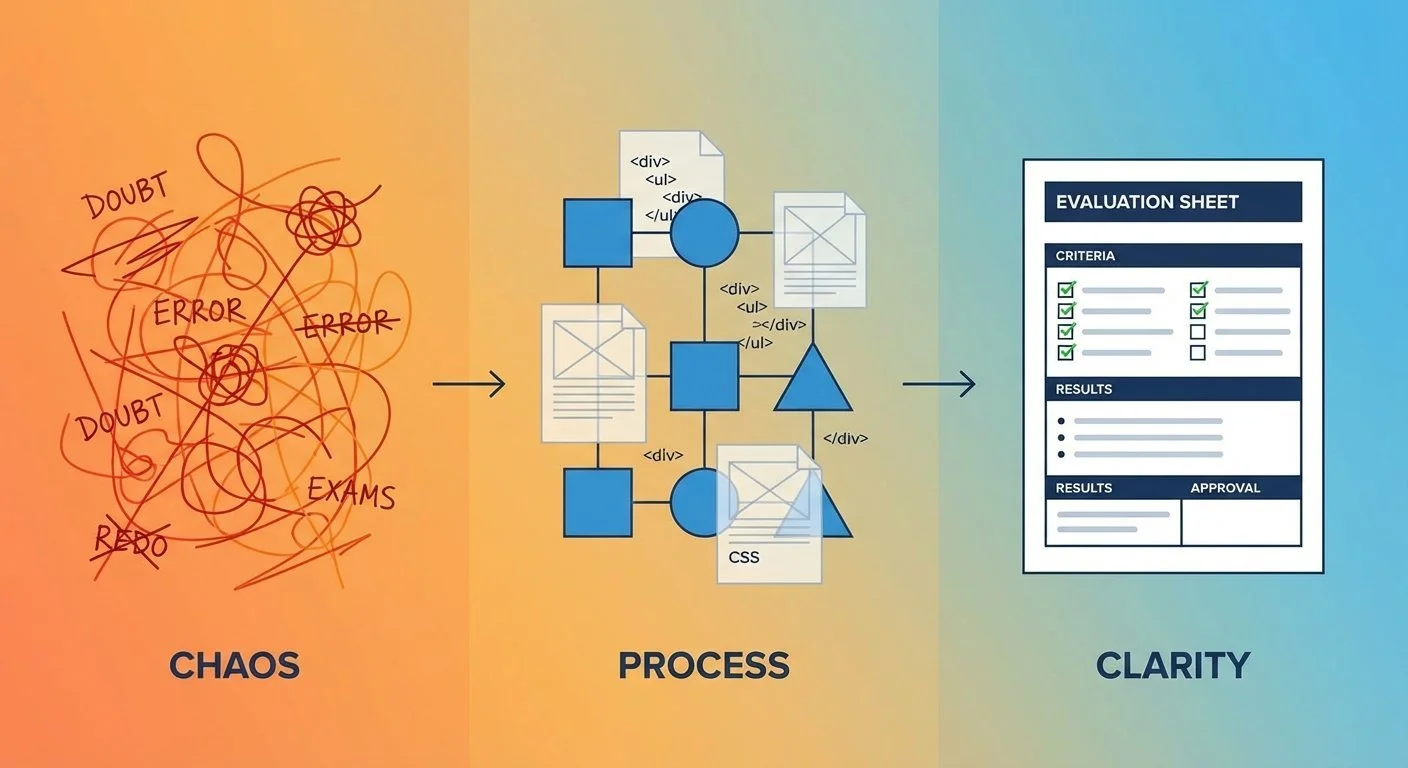

The Bart Test - Part 5: Redesigning From Scratch

After my teens ghosted the frontier model evaluation, I sat with a choice: give up on this whole thing, or try again.

The doubt was real. Maybe the Bart Test would never work. Maybe asking teenagers to evaluate AI-generated slang was fundamentally flawed. But I couldn't shake the insights from [Part 3](/blog/bart-test-part-3-the-zoo-not-duck-problem)—the "zoo not duck" problem, the slang half-life, the "trying too hard" pattern. Those felt real.

So I decided to try again. Not because I was confident it would work, but because I wasn't ready to give up.

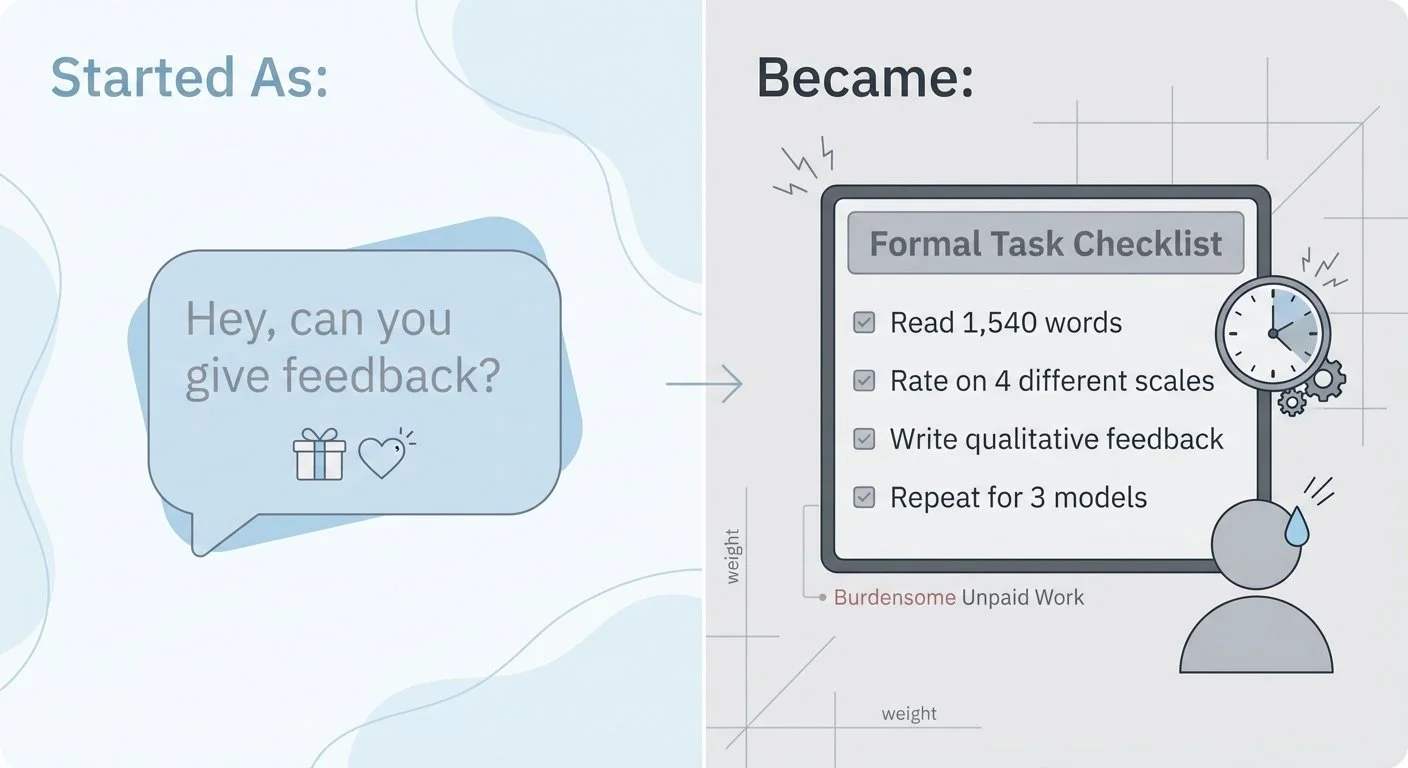

The Bart Test - Part 4: When My Teen Judges Ghosted Me

I tested GPT-5.2, Claude Opus 4.5, and Gemini 3 Pro with the baseline prompt. The [outputs](https://github.com/bart-mosaicmeshai/bart-test/tree/main/results/03_experiment_runs) were ready. I sent the first story ([GPT's 1,540-word epic](https://github.com/bart-mosaicmeshai/bart-test/blob/main/results/03_experiment_runs/03a_gpt5.2_baseline_20251218_202909.json)) to my kids via text.

No response.

I waited a few days. Still nothing.

A week passed. They weren't being difficult. They just... didn't respond.

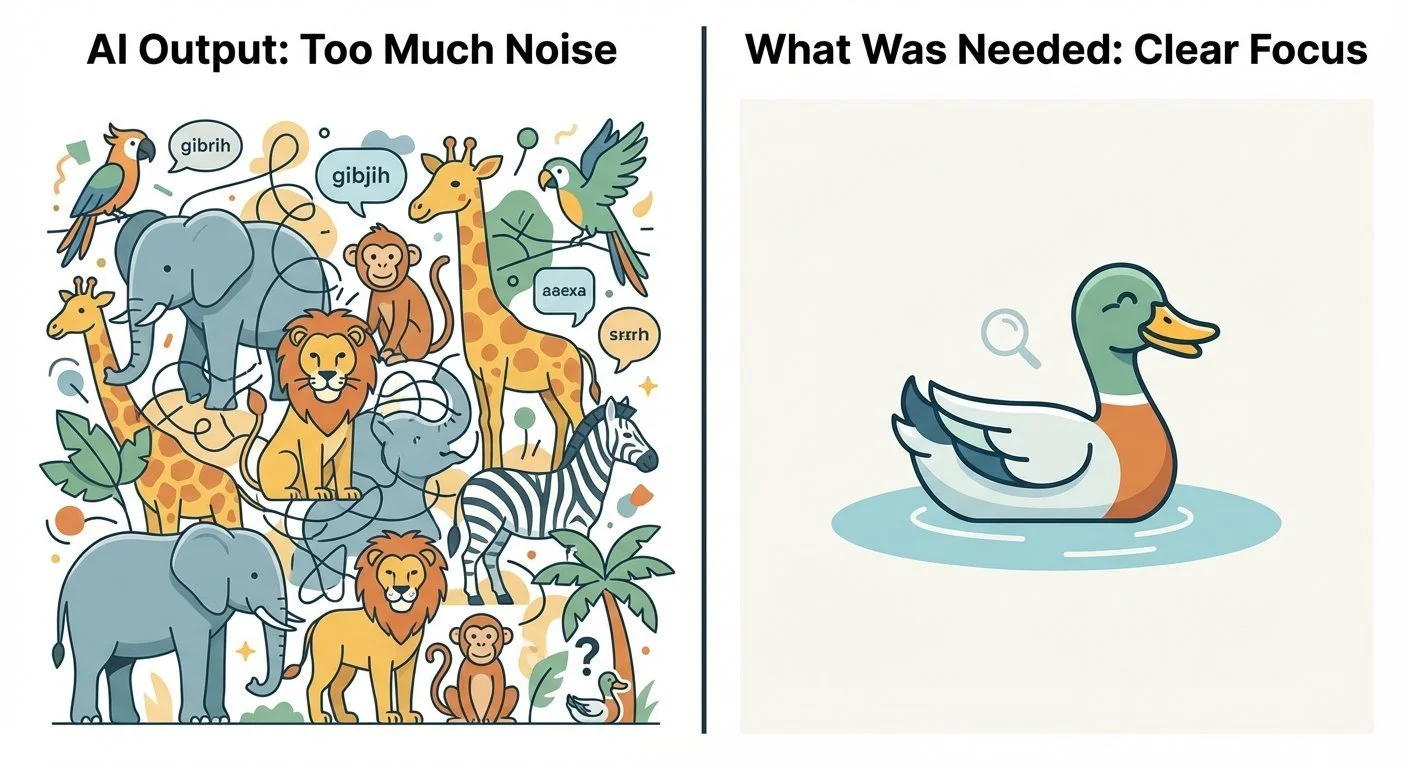

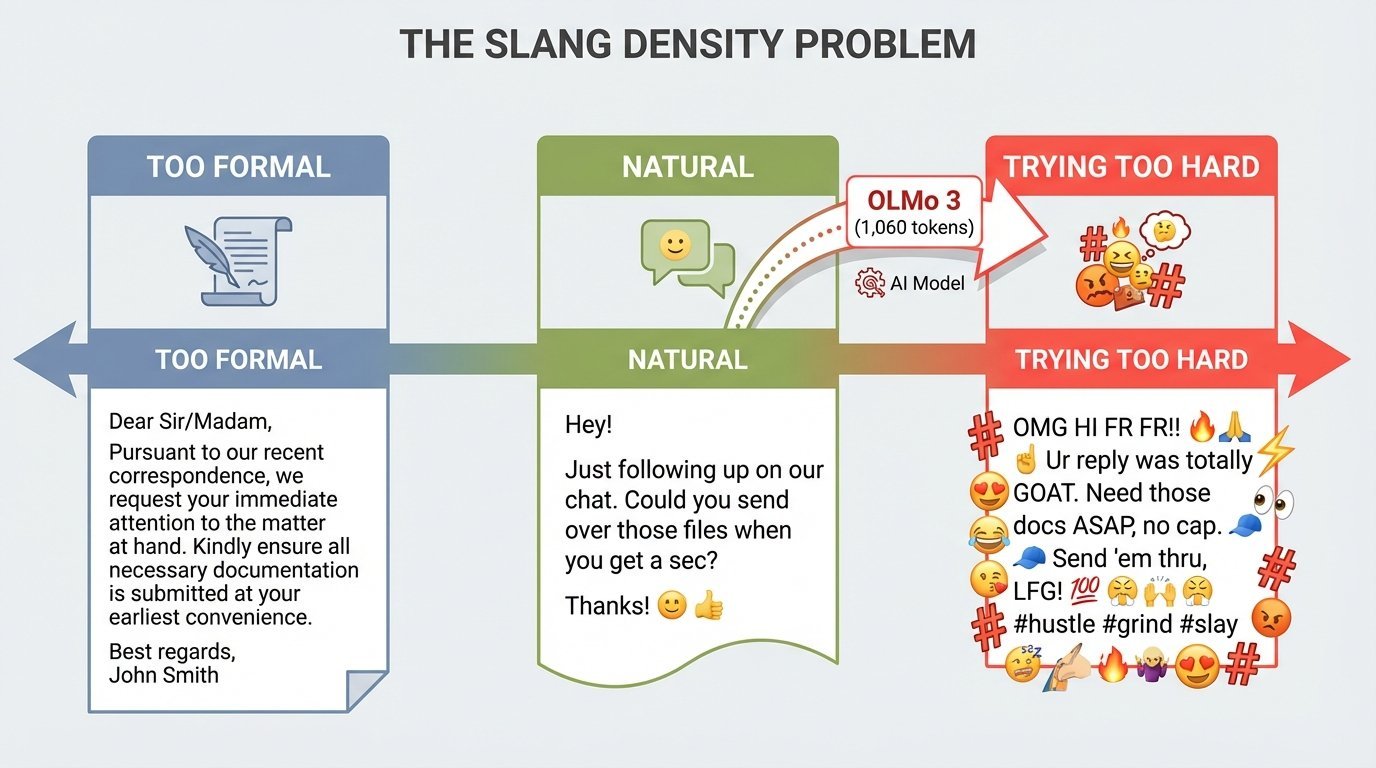

The Bart Test - Part 3: The Zoo-Not-Duck Problem

When I asked what made the AI output feel unnatural, Teen #1 said:

> "Just didn't seem like very effective communication. It's like if you are trying to paint a picture of a duck and you paint a picture of a zoo with a tiny duck exhibit in the corner. Too much noise."

This metaphor captured the core problem.

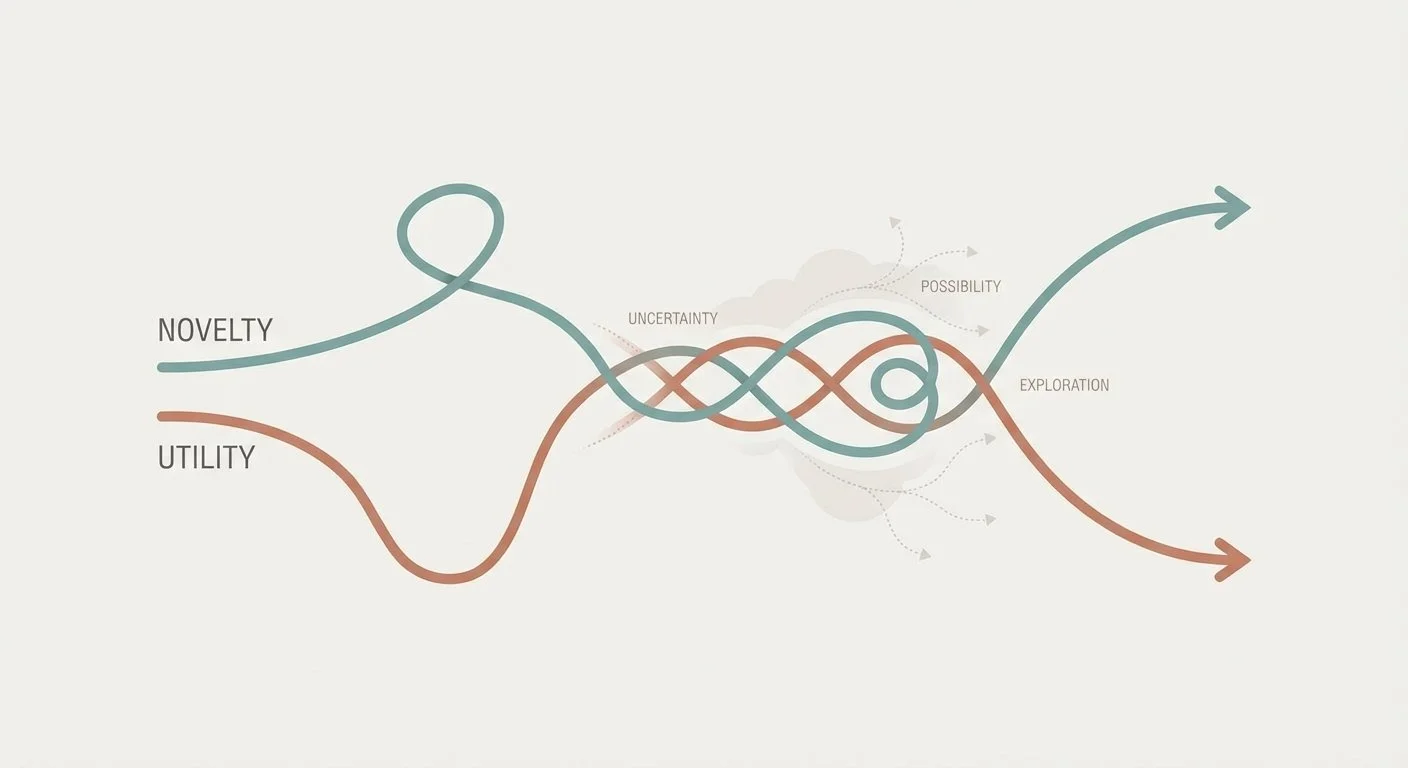

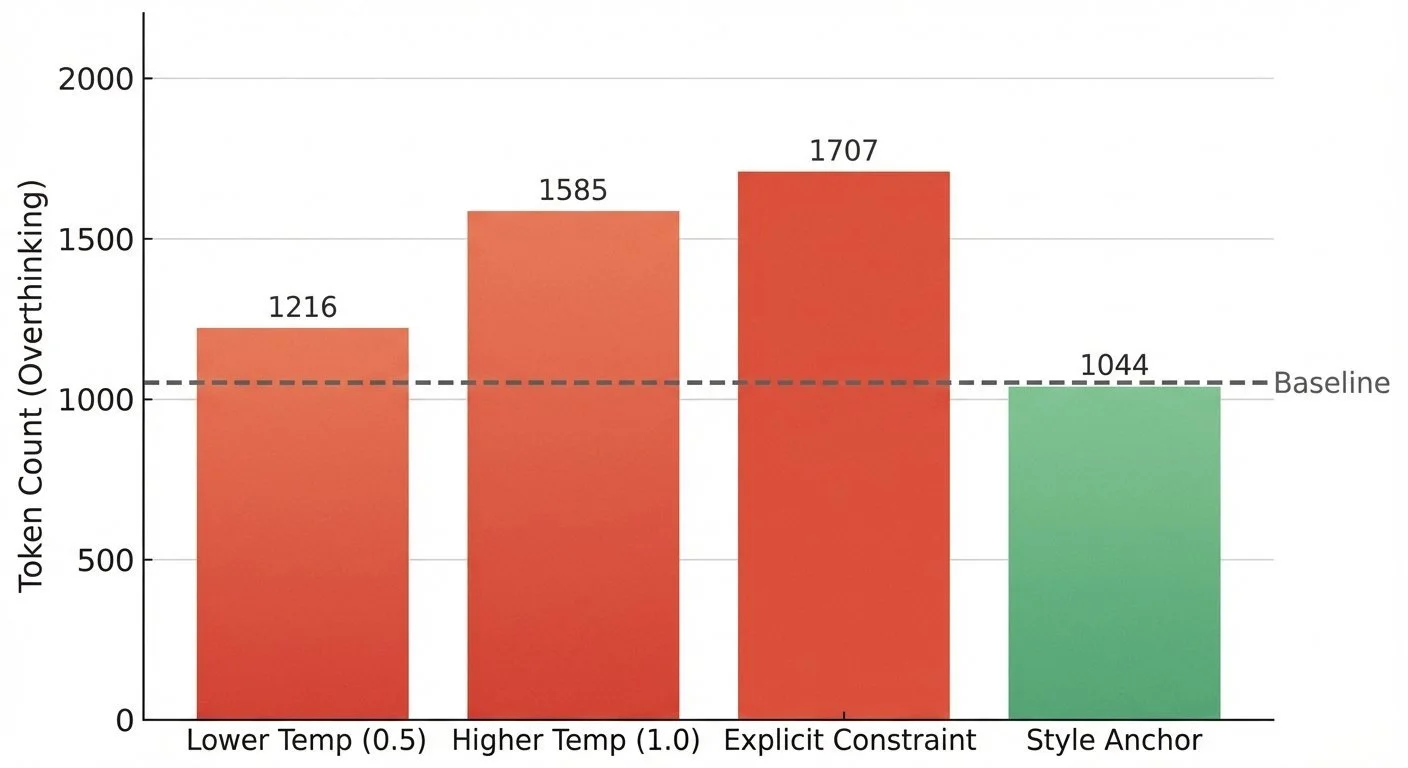

The Bart Test - Part 2: Testing the Overthinking Hypothesis

After seeing OLMo 3 overthink Gen-Alpha slang (scores of 4-5/10), I wondered: can I tune this to reduce overthinking? If the model is trying too hard, maybe I could adjust parameters or prompts to make it more natural.

Spoiler: Both directions made it worse.

The Bart Test - Part 1: When AI Does Its Homework Too Well

I asked my teenagers to judge an AI's attempt at Gen-Alpha slang.

Teen #1: "It's definitely AI... a little too much." Score: 4/10.

Teen #2: "It sounds like my ELA project where we had to use as much slang as possible." Score: 6/10 (if a teen wrote it), 2/10 (if an adult did).

The AI did its homework. That's the problem.

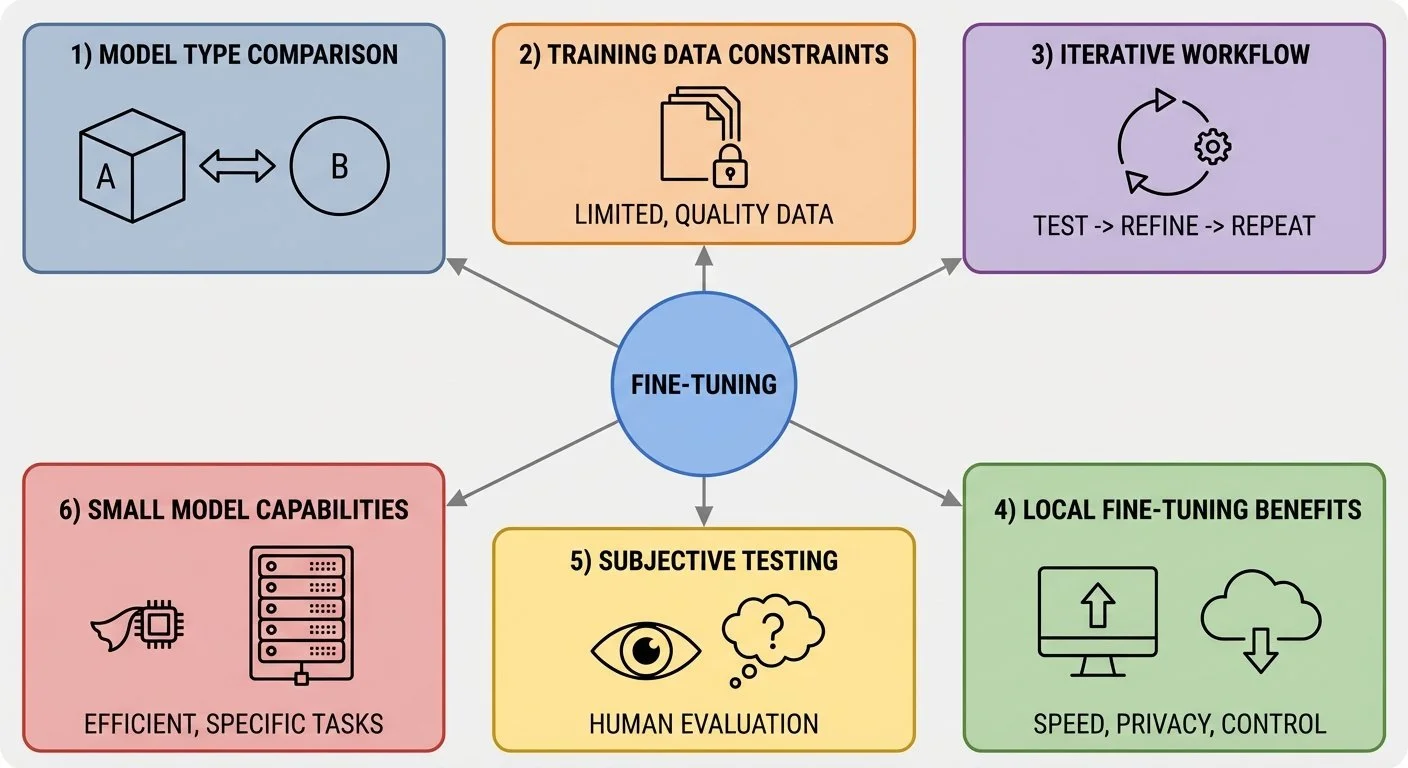

Fine-Tuning Gemma for Personality - Part 8: Lessons Learned

Eight posts about teaching an AI to talk like a cartoon dog. Here's what actually mattered.

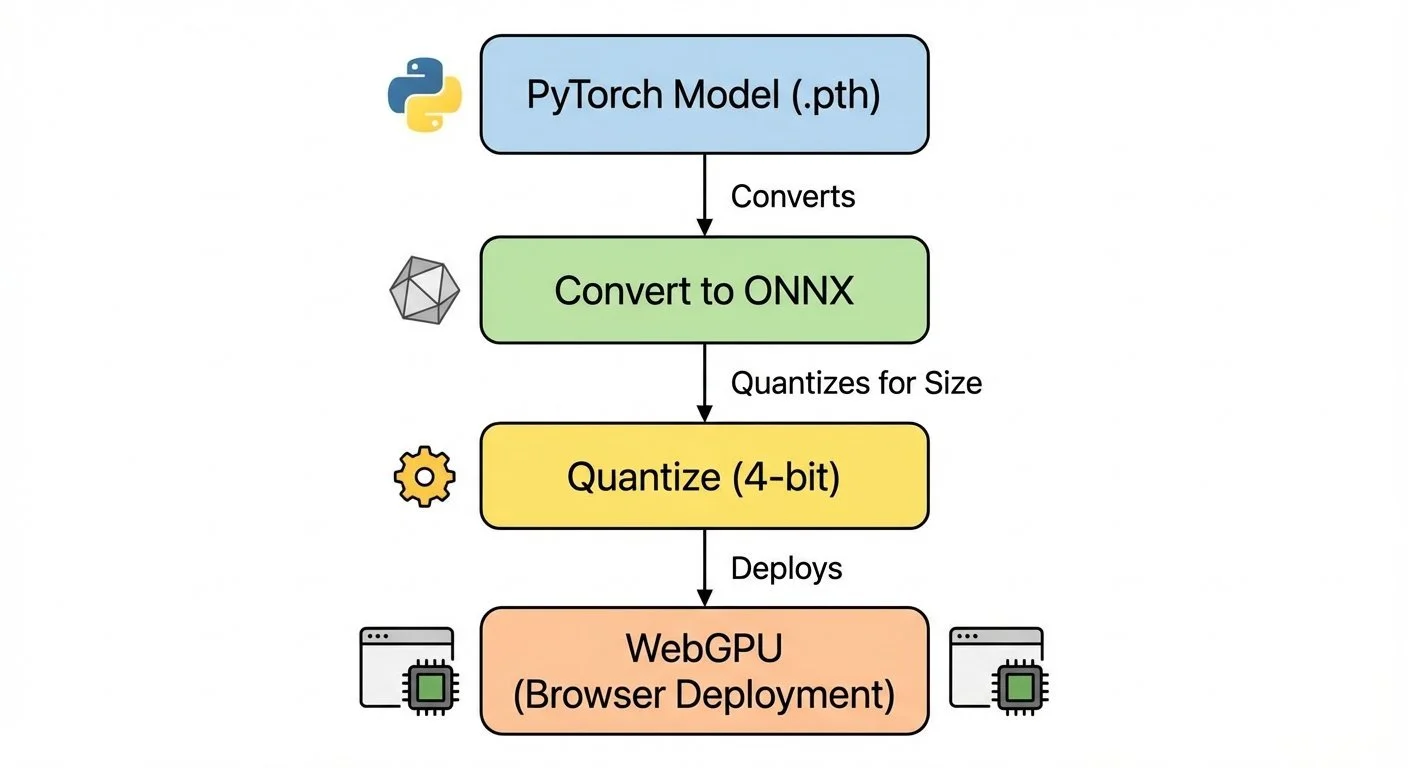

Fine-Tuning Gemma for Personality - Part 7: From PyTorch to Browser

Browser-based inference with a fine-tuned model. No backend server, no API keys, no monthly fees. Just client-side WebGPU running entirely on your device.

Fine-Tuning Gemma for Personality - Part 6: Testing Personality (Not Just Accuracy)

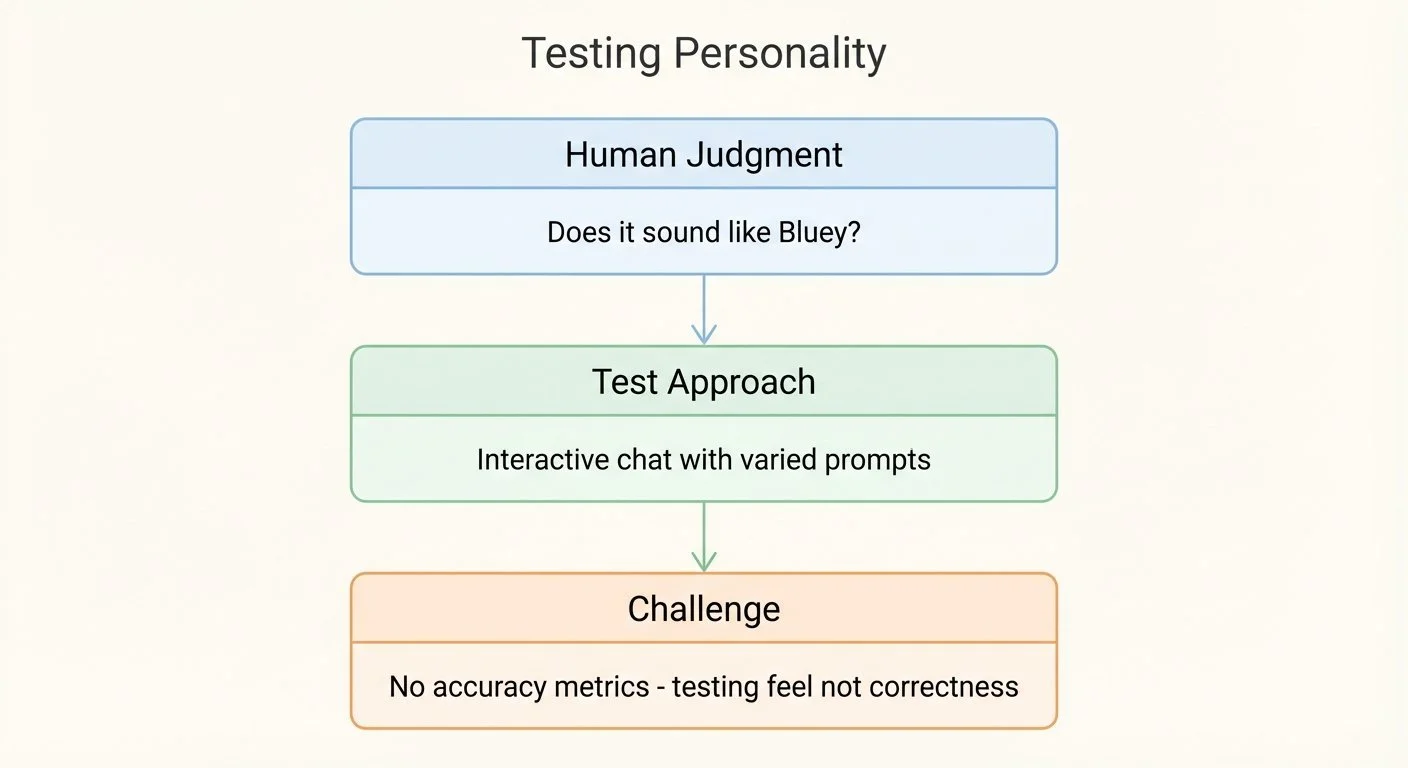

How do you test if an AI sounds like a personified 6-year-old dog? You can't unit test personality. There's no accuracy metric for "sounds like Bluey."

Fine-Tuning Gemma for Personality - Part 5: Base Models vs Instruction-Tuned

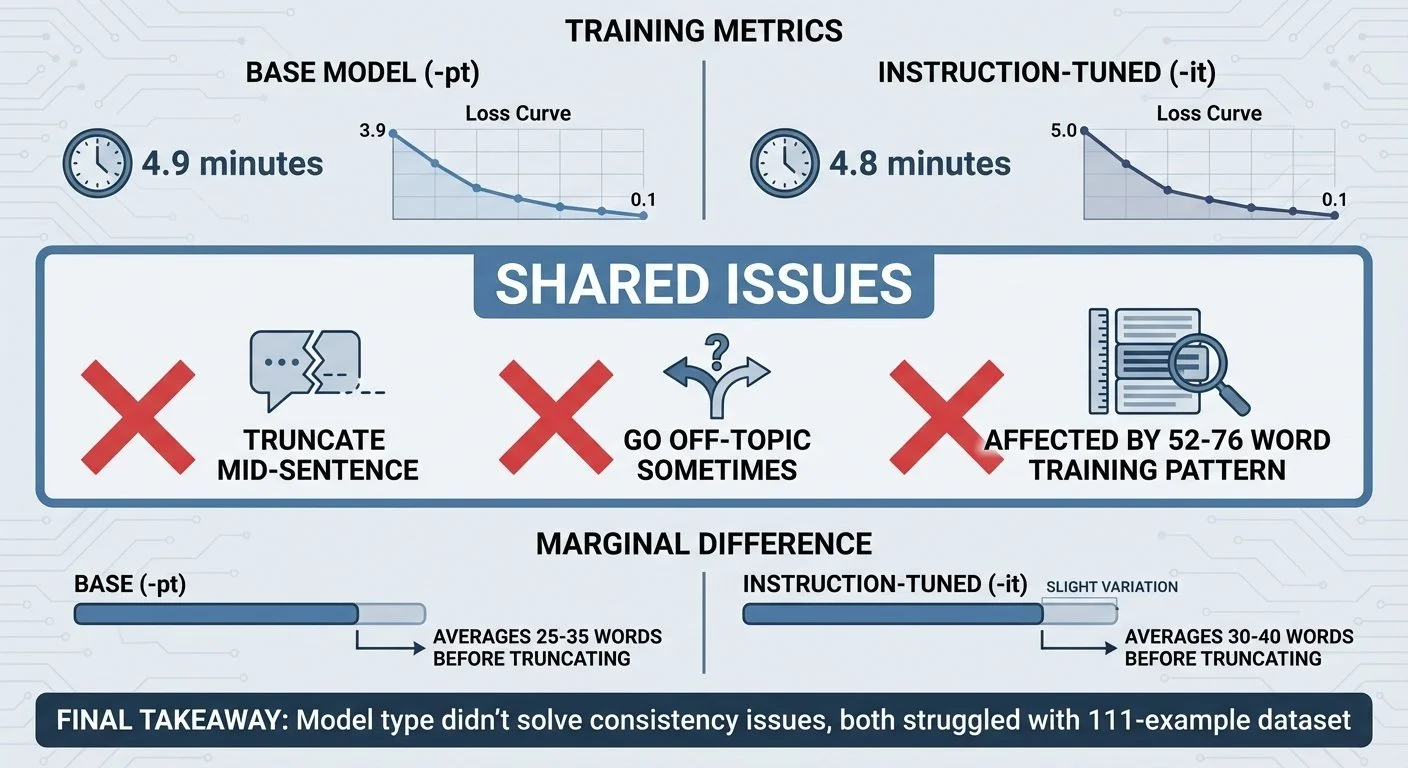

Same training data. Same hardware. Same 5 minutes. I tested both base (-pt) and instruction-tuned (-it) models to see if one would handle personality better. Both struggled with consistency.

Fine-Tuning Gemma for Personality - Part 4: When Your Model Learns Too Well

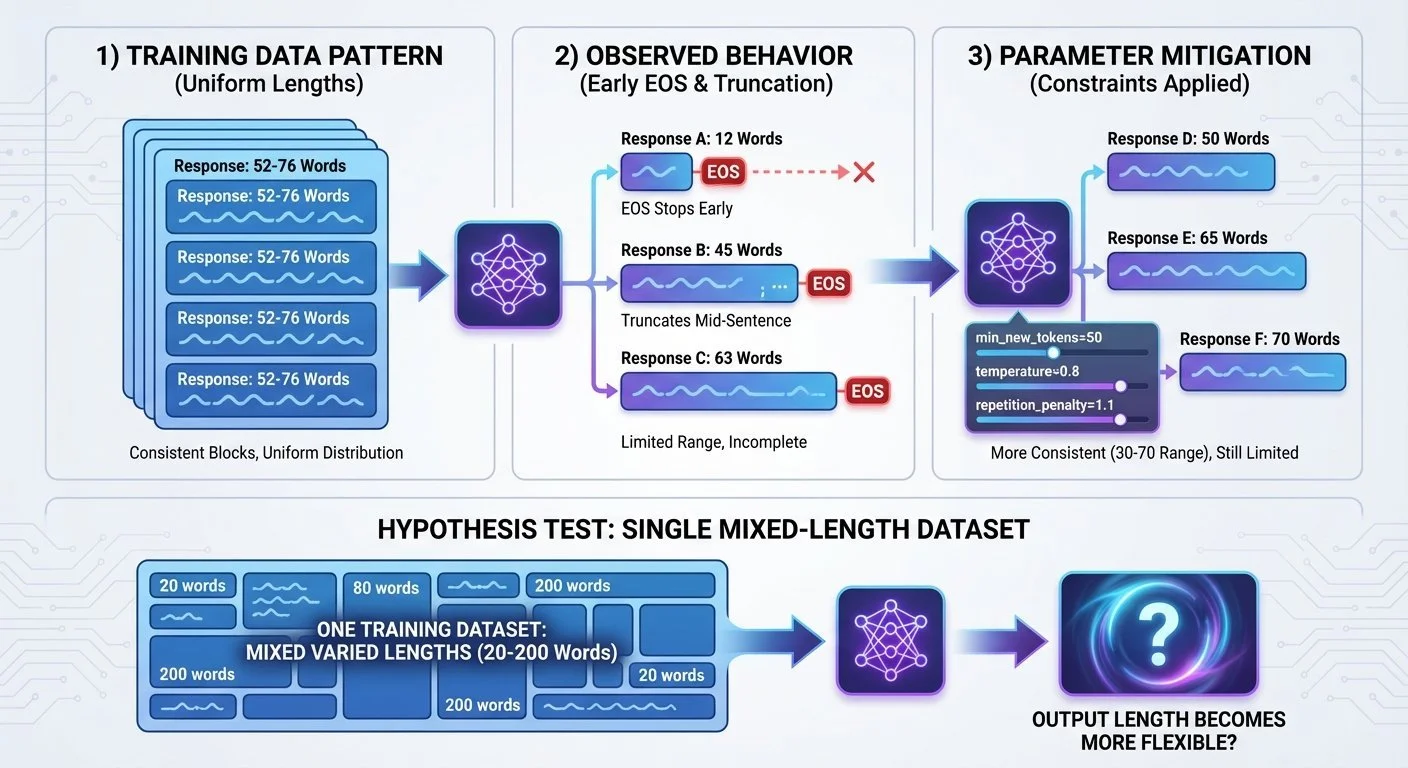

The model reproduced Bluey's speech patterns. Then it stopped generating after 76 words. The training data's average response length may have become a constraint.
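One way to probe that hunch is to compare the length of a generated reply against the word-count distribution of the training responses. The sketch below is a rough illustration, not the method used in the post: the function names, the 25% tolerance, and the placeholder responses are all assumptions made up for this example.

```python
from statistics import mean, median


def word_count(text: str) -> int:
    """Count whitespace-separated words in a string."""
    return len(text.split())


def length_summary(responses: list[str]) -> dict:
    """Summarize word counts across a list of training responses."""
    counts = [word_count(r) for r in responses]
    return {
        "n": len(counts),
        "mean": mean(counts),
        "median": median(counts),
        "max": max(counts),
    }


def stops_near_training_length(
    generated: str, responses: list[str], tolerance: float = 0.25
) -> bool:
    """Return True if the generated reply's word count falls within
    `tolerance` (as a fraction) of the training mean.

    The 25% default is an arbitrary, hypothetical threshold.
    """
    avg = mean(word_count(r) for r in responses)
    return abs(word_count(generated) - avg) <= tolerance * avg


# Placeholder data standing in for real training responses (hypothetical).
training_responses = ["word " * 60, "word " * 80, "word " * 100]
summary = length_summary(training_responses)
print(summary)
```

If generated replies consistently land near the training mean and rarely exceed the training maximum, that is consistent with the length-constraint explanation, though it does not prove it; varying the response lengths in the training set would be a stronger test.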

Fine-Tuning Gemma for Personality - Part 3: Training on Apple Silicon

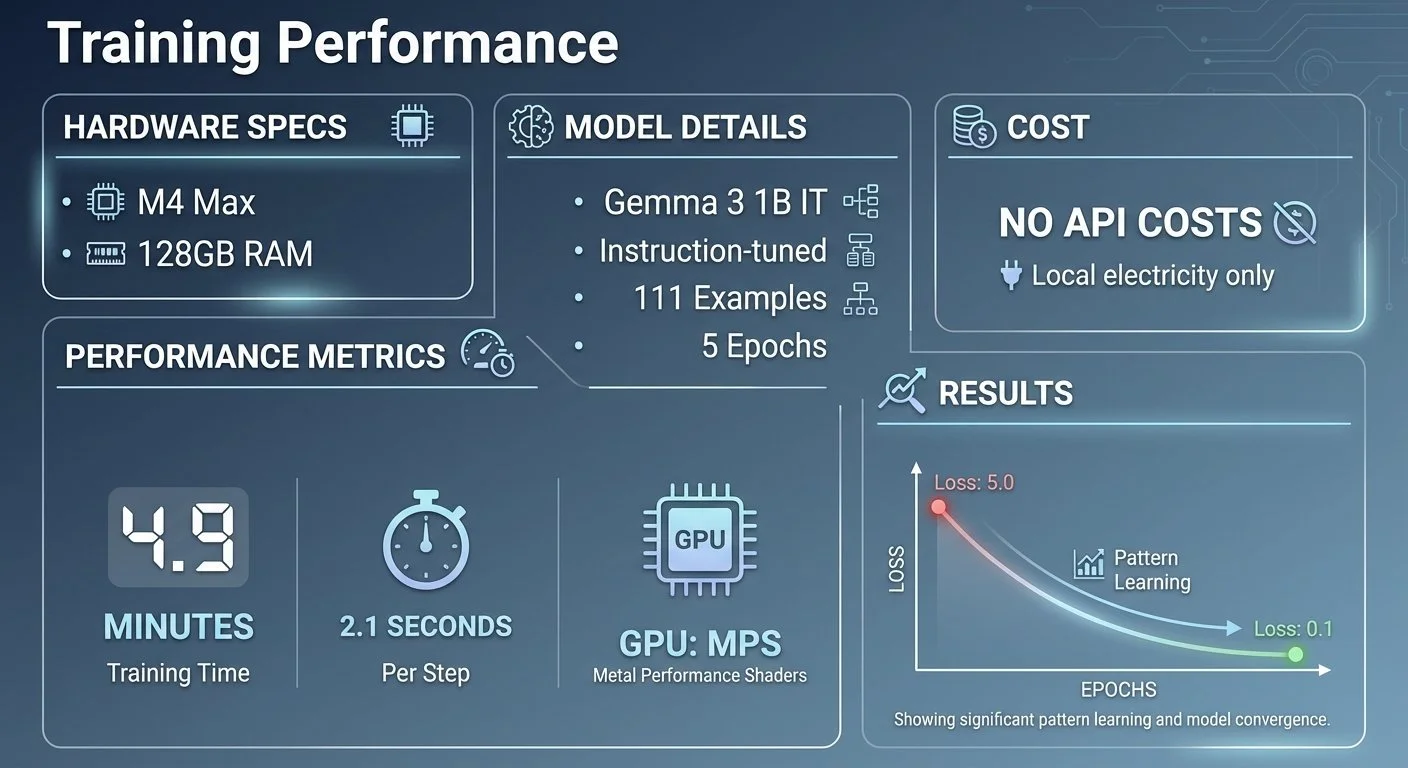

Five minutes and no API costs to fine-tune a 1-billion-parameter language model on my laptop. No cloud GPUs, no monthly fees, no time limits. Just Apple Silicon and PyTorch.

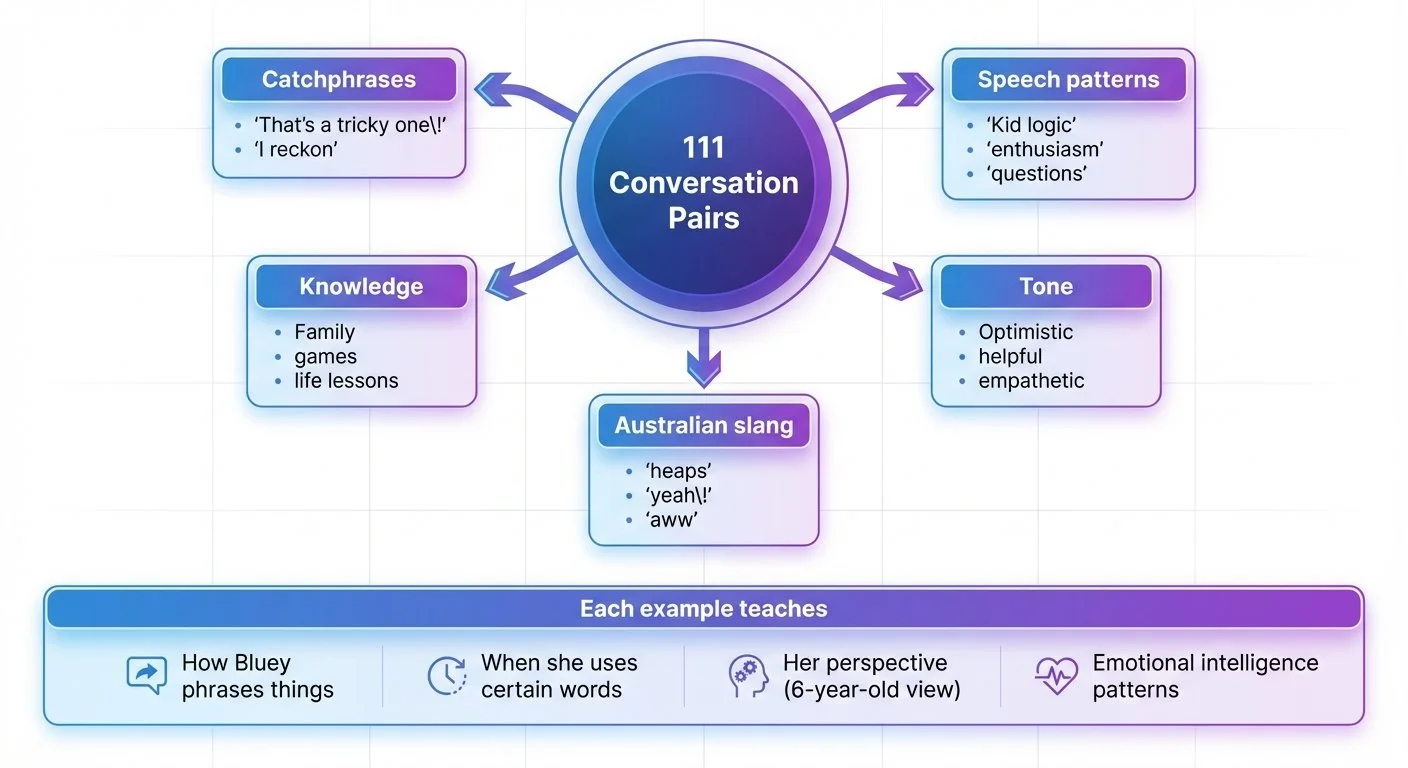

Fine-Tuning Gemma for Personality - Part 2: Building the Training Dataset

One hundred eleven conversations. That's what it took to demonstrate personality-style learning. Not thousands—just 111 AI-generated examples of how she talks, thinks, and helps.